Statistics

This notebook provides a comprehensive overview and examples of the statistical functions available in the fastmath.stats namespace. It covers a wide array of descriptive statistics, measures of spread, quantiles, moments, correlation, distance metrics, contingency table analysis, binary classification metrics, effect sizes, various statistical tests (normality, binomial, t/z, variance, goodness-of-fit, ANOVA, autocorrelation), time series analysis tools (ACF, PACF), data transformations, and histogram generation. Each section introduces the relevant concepts and demonstrates the use of fastmath.stats functions with illustrative datasets (mtcars, iris, winequality).

Datasets

To illustrate statistical functions three dataset will be used:

mtcarsiriswinequality

A fastmath.dev.dataset as ds will be used to access data.

Select the :mpg column from mtcars

(ds/mtcars :mpg)(21 21 22.8 21.4 18.7 18.1 14.3 24.4 22.8 19.2 17.8 16.4 17.3 15.2 10.4 10.4 14.7 32.4 30.4 33.9 21.5 15.5 15.2 13.3 19.2 27.3 26 30.4 15.8 19.7 15 21.4)Select the :mpg column with a row predicate

(ds/mtcars :mpg (fn [row] (and (< (row :mpg) 20.0) (zero? (row :am)))))(18.7 18.1 14.3 19.2 17.8 16.4 17.3 15.2 10.4 10.4 14.7 15.5 15.2 13.3 19.2)Group by the :am column and select :mpg

(ds/by ds/mtcars :am :mpg){1 (21 21 22.8 32.4 30.4 33.9 27.3 26 30.4 15.8 19.7 15 21.4), 0 (21.4 18.7 18.1 14.3 24.4 22.8 19.2 17.8 16.4 17.3 15.2 10.4 10.4 14.7 21.5 15.5 15.2 13.3 19.2)}mtcars

11 attributes of 32 different cars comparison

| name | qsec | cyl | am | gear | disp | wt | drat | hp | mpg | vs | carb |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Mazda RX4 | 16.46 | 6 | 1 | 4 | 160 | 2.62 | 3.9 | 110 | 21 | 0 | 4 |

| Mazda RX4 Wag | 17.02 | 6 | 1 | 4 | 160 | 2.875 | 3.9 | 110 | 21 | 0 | 4 |

| Datsun 710 | 18.61 | 4 | 1 | 4 | 108 | 2.32 | 3.85 | 93 | 22.8 | 1 | 1 |

| Hornet 4 Drive | 19.44 | 6 | 0 | 3 | 258 | 3.215 | 3.08 | 110 | 21.4 | 1 | 1 |

| Hornet Sportabout | 17.02 | 8 | 0 | 3 | 360 | 3.44 | 3.15 | 175 | 18.7 | 0 | 2 |

| Valiant | 20.22 | 6 | 0 | 3 | 225 | 3.46 | 2.76 | 105 | 18.1 | 1 | 1 |

| Duster 360 | 15.84 | 8 | 0 | 3 | 360 | 3.57 | 3.21 | 245 | 14.3 | 0 | 4 |

| Merc 240D | 20 | 4 | 0 | 4 | 146.7 | 3.19 | 3.69 | 62 | 24.4 | 1 | 2 |

| Merc 230 | 22.9 | 4 | 0 | 4 | 140.8 | 3.15 | 3.92 | 95 | 22.8 | 1 | 2 |

| Merc 280 | 18.3 | 6 | 0 | 4 | 167.6 | 3.44 | 3.92 | 123 | 19.2 | 1 | 4 |

| Merc 280C | 18.9 | 6 | 0 | 4 | 167.6 | 3.44 | 3.92 | 123 | 17.8 | 1 | 4 |

| Merc 450SE | 17.4 | 8 | 0 | 3 | 275.8 | 4.07 | 3.07 | 180 | 16.4 | 0 | 3 |

| Merc 450SL | 17.6 | 8 | 0 | 3 | 275.8 | 3.73 | 3.07 | 180 | 17.3 | 0 | 3 |

| Merc 450SLC | 18 | 8 | 0 | 3 | 275.8 | 3.78 | 3.07 | 180 | 15.2 | 0 | 3 |

| Cadillac Fleetwood | 17.98 | 8 | 0 | 3 | 472 | 5.25 | 2.93 | 205 | 10.4 | 0 | 4 |

| Lincoln Continental | 17.82 | 8 | 0 | 3 | 460 | 5.424 | 3 | 215 | 10.4 | 0 | 4 |

| Chrysler Imperial | 17.42 | 8 | 0 | 3 | 440 | 5.345 | 3.23 | 230 | 14.7 | 0 | 4 |

| Fiat 128 | 19.47 | 4 | 1 | 4 | 78.7 | 2.2 | 4.08 | 66 | 32.4 | 1 | 1 |

| Honda Civic | 18.52 | 4 | 1 | 4 | 75.7 | 1.615 | 4.93 | 52 | 30.4 | 1 | 2 |

| Toyota Corolla | 19.9 | 4 | 1 | 4 | 71.1 | 1.835 | 4.22 | 65 | 33.9 | 1 | 1 |

| Toyota Corona | 20.01 | 4 | 0 | 3 | 120.1 | 2.465 | 3.7 | 97 | 21.5 | 1 | 1 |

| Dodge Challenger | 16.87 | 8 | 0 | 3 | 318 | 3.52 | 2.76 | 150 | 15.5 | 0 | 2 |

| AMC Javelin | 17.3 | 8 | 0 | 3 | 304 | 3.435 | 3.15 | 150 | 15.2 | 0 | 2 |

| Camaro Z28 | 15.41 | 8 | 0 | 3 | 350 | 3.84 | 3.73 | 245 | 13.3 | 0 | 4 |

| Pontiac Firebird | 17.05 | 8 | 0 | 3 | 400 | 3.845 | 3.08 | 175 | 19.2 | 0 | 2 |

| Fiat X1-9 | 18.9 | 4 | 1 | 4 | 79 | 1.935 | 4.08 | 66 | 27.3 | 1 | 1 |

| Porsche 914-2 | 16.7 | 4 | 1 | 5 | 120.3 | 2.14 | 4.43 | 91 | 26 | 0 | 2 |

| Lotus Europa | 16.9 | 4 | 1 | 5 | 95.1 | 1.513 | 3.77 | 113 | 30.4 | 1 | 2 |

| Ford Pantera L | 14.5 | 8 | 1 | 5 | 351 | 3.17 | 4.22 | 264 | 15.8 | 0 | 4 |

| Ferrari Dino | 15.5 | 6 | 1 | 5 | 145 | 2.77 | 3.62 | 175 | 19.7 | 0 | 6 |

| Maserati Bora | 14.6 | 8 | 1 | 5 | 301 | 3.57 | 3.54 | 335 | 15 | 0 | 8 |

| Volvo 142E | 18.6 | 4 | 1 | 4 | 121 | 2.78 | 4.11 | 109 | 21.4 | 1 | 2 |

iris

Sepal and Petal iris species comparison

| sepal-length | sepal-width | petal-length | petal-width | species |

|---|---|---|---|---|

| 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 4.9 | 3 | 1.4 | 0.2 | setosa |

| 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 5 | 3.6 | 1.4 | 0.2 | setosa |

| 5.4 | 3.9 | 1.7 | 0.4 | setosa |

| 4.6 | 3.4 | 1.4 | 0.3 | setosa |

| 5 | 3.4 | 1.5 | 0.2 | setosa |

| 4.4 | 2.9 | 1.4 | 0.2 | setosa |

| 4.9 | 3.1 | 1.5 | 0.1 | setosa |

| 5.4 | 3.7 | 1.5 | 0.2 | setosa |

| 4.8 | 3.4 | 1.6 | 0.2 | setosa |

| 4.8 | 3 | 1.4 | 0.1 | setosa |

| 4.3 | 3 | 1.1 | 0.1 | setosa |

| 5.8 | 4 | 1.2 | 0.2 | setosa |

| 5.7 | 4.4 | 1.5 | 0.4 | setosa |

| 5.4 | 3.9 | 1.3 | 0.4 | setosa |

| 5.1 | 3.5 | 1.4 | 0.3 | setosa |

| 5.7 | 3.8 | 1.7 | 0.3 | setosa |

| 5.1 | 3.8 | 1.5 | 0.3 | setosa |

| 5.4 | 3.4 | 1.7 | 0.2 | setosa |

| 5.1 | 3.7 | 1.5 | 0.4 | setosa |

| 4.6 | 3.6 | 1 | 0.2 | setosa |

| 5.1 | 3.3 | 1.7 | 0.5 | setosa |

| 4.8 | 3.4 | 1.9 | 0.2 | setosa |

| 5 | 3 | 1.6 | 0.2 | setosa |

| 5 | 3.4 | 1.6 | 0.4 | setosa |

| 5.2 | 3.5 | 1.5 | 0.2 | setosa |

| 5.2 | 3.4 | 1.4 | 0.2 | setosa |

| 4.7 | 3.2 | 1.6 | 0.2 | setosa |

| 4.8 | 3.1 | 1.6 | 0.2 | setosa |

| 5.4 | 3.4 | 1.5 | 0.4 | setosa |

| 5.2 | 4.1 | 1.5 | 0.1 | setosa |

| 5.5 | 4.2 | 1.4 | 0.2 | setosa |

| 4.9 | 3.1 | 1.5 | 0.1 | setosa |

| 5 | 3.2 | 1.2 | 0.2 | setosa |

| 5.5 | 3.5 | 1.3 | 0.2 | setosa |

| 4.9 | 3.1 | 1.5 | 0.1 | setosa |

| 4.4 | 3 | 1.3 | 0.2 | setosa |

| 5.1 | 3.4 | 1.5 | 0.2 | setosa |

| 5 | 3.5 | 1.3 | 0.3 | setosa |

| 4.5 | 2.3 | 1.3 | 0.3 | setosa |

| 4.4 | 3.2 | 1.3 | 0.2 | setosa |

| 5 | 3.5 | 1.6 | 0.6 | setosa |

| 5.1 | 3.8 | 1.9 | 0.4 | setosa |

| 4.8 | 3 | 1.4 | 0.3 | setosa |

| 5.1 | 3.8 | 1.6 | 0.2 | setosa |

| 4.6 | 3.2 | 1.4 | 0.2 | setosa |

| 5.3 | 3.7 | 1.5 | 0.2 | setosa |

| 5 | 3.3 | 1.4 | 0.2 | setosa |

| 7 | 3.2 | 4.7 | 1.4 | versicolor |

| 6.4 | 3.2 | 4.5 | 1.5 | versicolor |

| 6.9 | 3.1 | 4.9 | 1.5 | versicolor |

| 5.5 | 2.3 | 4 | 1.3 | versicolor |

| 6.5 | 2.8 | 4.6 | 1.5 | versicolor |

| 5.7 | 2.8 | 4.5 | 1.3 | versicolor |

| 6.3 | 3.3 | 4.7 | 1.6 | versicolor |

| 4.9 | 2.4 | 3.3 | 1 | versicolor |

| 6.6 | 2.9 | 4.6 | 1.3 | versicolor |

| 5.2 | 2.7 | 3.9 | 1.4 | versicolor |

| 5 | 2 | 3.5 | 1 | versicolor |

| 5.9 | 3 | 4.2 | 1.5 | versicolor |

| 6 | 2.2 | 4 | 1 | versicolor |

| 6.1 | 2.9 | 4.7 | 1.4 | versicolor |

| 5.6 | 2.9 | 3.6 | 1.3 | versicolor |

| 6.7 | 3.1 | 4.4 | 1.4 | versicolor |

| 5.6 | 3 | 4.5 | 1.5 | versicolor |

| 5.8 | 2.7 | 4.1 | 1 | versicolor |

| 6.2 | 2.2 | 4.5 | 1.5 | versicolor |

| 5.6 | 2.5 | 3.9 | 1.1 | versicolor |

| 5.9 | 3.2 | 4.8 | 1.8 | versicolor |

| 6.1 | 2.8 | 4 | 1.3 | versicolor |

| 6.3 | 2.5 | 4.9 | 1.5 | versicolor |

| 6.1 | 2.8 | 4.7 | 1.2 | versicolor |

| 6.4 | 2.9 | 4.3 | 1.3 | versicolor |

| 6.6 | 3 | 4.4 | 1.4 | versicolor |

| 6.8 | 2.8 | 4.8 | 1.4 | versicolor |

| 6.7 | 3 | 5 | 1.7 | versicolor |

| 6 | 2.9 | 4.5 | 1.5 | versicolor |

| 5.7 | 2.6 | 3.5 | 1 | versicolor |

| 5.5 | 2.4 | 3.8 | 1.1 | versicolor |

| 5.5 | 2.4 | 3.7 | 1 | versicolor |

| 5.8 | 2.7 | 3.9 | 1.2 | versicolor |

| 6 | 2.7 | 5.1 | 1.6 | versicolor |

| 5.4 | 3 | 4.5 | 1.5 | versicolor |

| 6 | 3.4 | 4.5 | 1.6 | versicolor |

| 6.7 | 3.1 | 4.7 | 1.5 | versicolor |

| 6.3 | 2.3 | 4.4 | 1.3 | versicolor |

| 5.6 | 3 | 4.1 | 1.3 | versicolor |

| 5.5 | 2.5 | 4 | 1.3 | versicolor |

| 5.5 | 2.6 | 4.4 | 1.2 | versicolor |

| 6.1 | 3 | 4.6 | 1.4 | versicolor |

| 5.8 | 2.6 | 4 | 1.2 | versicolor |

| 5 | 2.3 | 3.3 | 1 | versicolor |

| 5.6 | 2.7 | 4.2 | 1.3 | versicolor |

| 5.7 | 3 | 4.2 | 1.2 | versicolor |

| 5.7 | 2.9 | 4.2 | 1.3 | versicolor |

| 6.2 | 2.9 | 4.3 | 1.3 | versicolor |

| 5.1 | 2.5 | 3 | 1.1 | versicolor |

| 5.7 | 2.8 | 4.1 | 1.3 | versicolor |

| 6.3 | 3.3 | 6 | 2.5 | virginica |

| 5.8 | 2.7 | 5.1 | 1.9 | virginica |

| 7.1 | 3 | 5.9 | 2.1 | virginica |

| 6.3 | 2.9 | 5.6 | 1.8 | virginica |

| 6.5 | 3 | 5.8 | 2.2 | virginica |

| 7.6 | 3 | 6.6 | 2.1 | virginica |

| 4.9 | 2.5 | 4.5 | 1.7 | virginica |

| 7.3 | 2.9 | 6.3 | 1.8 | virginica |

| 6.7 | 2.5 | 5.8 | 1.8 | virginica |

| 7.2 | 3.6 | 6.1 | 2.5 | virginica |

| 6.5 | 3.2 | 5.1 | 2 | virginica |

| 6.4 | 2.7 | 5.3 | 1.9 | virginica |

| 6.8 | 3 | 5.5 | 2.1 | virginica |

| 5.7 | 2.5 | 5 | 2 | virginica |

| 5.8 | 2.8 | 5.1 | 2.4 | virginica |

| 6.4 | 3.2 | 5.3 | 2.3 | virginica |

| 6.5 | 3 | 5.5 | 1.8 | virginica |

| 7.7 | 3.8 | 6.7 | 2.2 | virginica |

| 7.7 | 2.6 | 6.9 | 2.3 | virginica |

| 6 | 2.2 | 5 | 1.5 | virginica |

| 6.9 | 3.2 | 5.7 | 2.3 | virginica |

| 5.6 | 2.8 | 4.9 | 2 | virginica |

| 7.7 | 2.8 | 6.7 | 2 | virginica |

| 6.3 | 2.7 | 4.9 | 1.8 | virginica |

| 6.7 | 3.3 | 5.7 | 2.1 | virginica |

| 7.2 | 3.2 | 6 | 1.8 | virginica |

| 6.2 | 2.8 | 4.8 | 1.8 | virginica |

| 6.1 | 3 | 4.9 | 1.8 | virginica |

| 6.4 | 2.8 | 5.6 | 2.1 | virginica |

| 7.2 | 3 | 5.8 | 1.6 | virginica |

| 7.4 | 2.8 | 6.1 | 1.9 | virginica |

| 7.9 | 3.8 | 6.4 | 2 | virginica |

| 6.4 | 2.8 | 5.6 | 2.2 | virginica |

| 6.3 | 2.8 | 5.1 | 1.5 | virginica |

| 6.1 | 2.6 | 5.6 | 1.4 | virginica |

| 7.7 | 3 | 6.1 | 2.3 | virginica |

| 6.3 | 3.4 | 5.6 | 2.4 | virginica |

| 6.4 | 3.1 | 5.5 | 1.8 | virginica |

| 6 | 3 | 4.8 | 1.8 | virginica |

| 6.9 | 3.1 | 5.4 | 2.1 | virginica |

| 6.7 | 3.1 | 5.6 | 2.4 | virginica |

| 6.9 | 3.1 | 5.1 | 2.3 | virginica |

| 5.8 | 2.7 | 5.1 | 1.9 | virginica |

| 6.8 | 3.2 | 5.9 | 2.3 | virginica |

| 6.7 | 3.3 | 5.7 | 2.5 | virginica |

| 6.7 | 3 | 5.2 | 2.3 | virginica |

| 6.3 | 2.5 | 5 | 1.9 | virginica |

| 6.5 | 3 | 5.2 | 2 | virginica |

| 6.2 | 3.4 | 5.4 | 2.3 | virginica |

| 5.9 | 3 | 5.1 | 1.8 | virginica |

winequality

White Portugese Vinho Verde wine consisting set of quality parameters (physicochemical and sensory).

Data

Let’s bind some common columns to a global vars

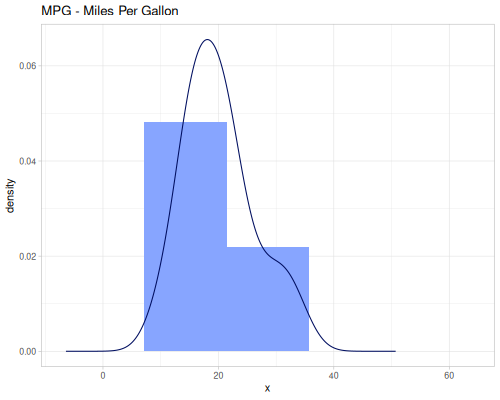

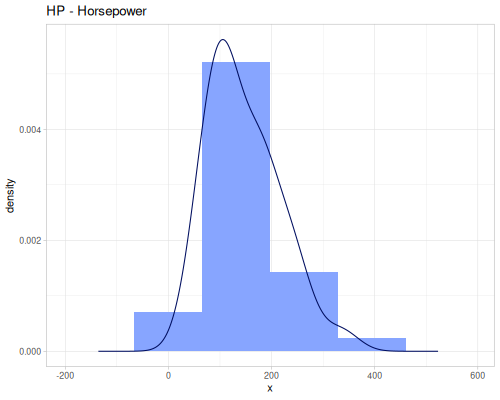

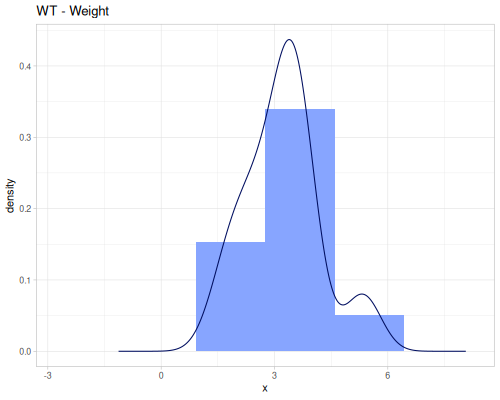

mtcars

Miles per US gallon

(def mpg (ds/mtcars :mpg))Horsepower

(def hp (ds/mtcars :hp))Weight of the car

(def wt (ds/mtcars :wt)) |

|

|

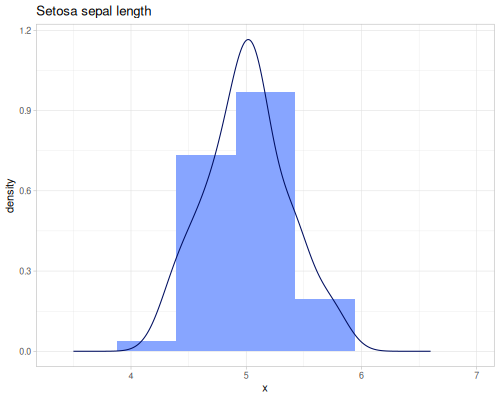

iris

Sepal lengths

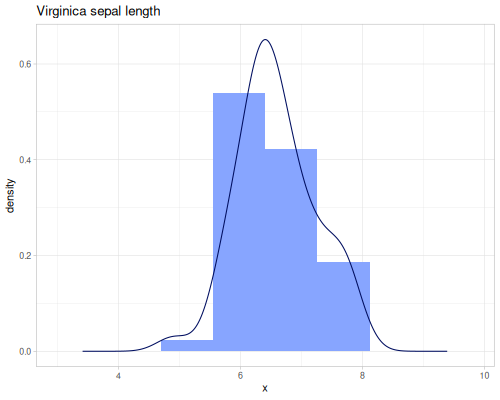

(def sepal-lengths (ds/by ds/iris :species :sepal-length))(def setosa-sepal-length (sepal-lengths :setosa))(def virginica-sepal-length (sepal-lengths :virginica)) |

|

Sepal widths

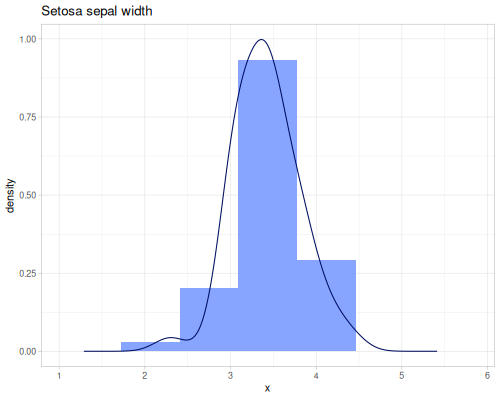

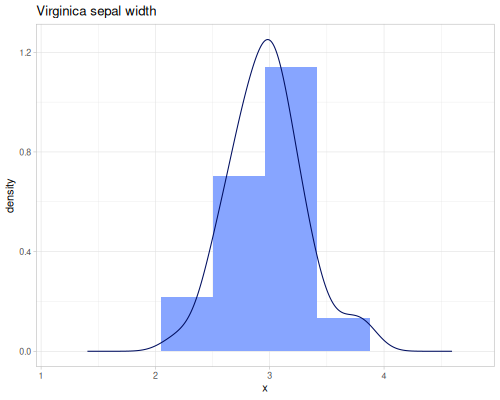

(def sepal-widths (ds/by ds/iris :species :sepal-width))(def setosa-sepal-width (sepal-widths :setosa))(def virginica-sepal-width (sepal-widths :virginica)) |

|

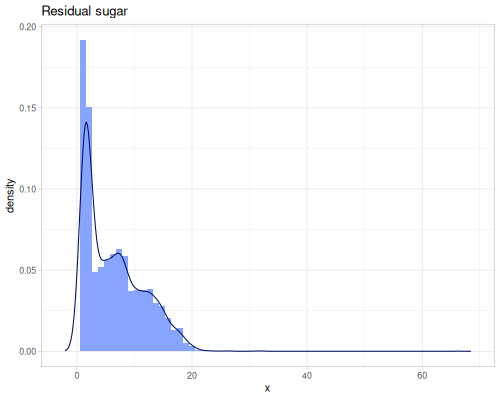

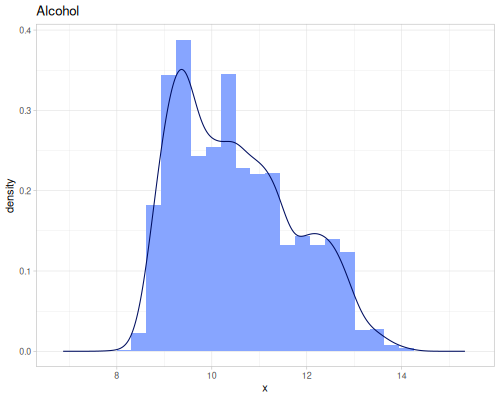

winequality

(def residual-sugar (ds/winequality "residual sugar"))(def alcohol (ds/winequality "alcohol")) |

|

Basic Descriptive Statistics

General summary statistics for describing the center and range of data.

minimum,maximumsummeangeomean,harmean,powmeanmode,modeswmode,wmodesstats-map

Basic

This section covers fundamental descriptive statistics including finding the smallest (minimum) and largest (maximum) values, and calculating the total (sum).

(stats/minimum mpg) ;; => 10.4

(stats/maximum mpg) ;; => 33.9

(stats/sum mpg) ;; => 642.8999999999999Compensated summation can be used to reduce numerical error. There are three algorithms implemented:

:kahan: The classic algorithm using a single correction variable to reduce numerical error.:neumayer: An improvement on Kahan, also using one correction variable but often providing better accuracy.:klein: A higher-order method using two correction variables, typically offering the highest accuracy at a slight computational cost.

As you can see below, all compensated summation give accurate result for mpg data.

(stats/sum mpg) ;; => 642.8999999999999

(stats/sum mpg :kahan) ;; => 642.9

(stats/sum mpg :neumayer) ;; => 642.9

(stats/sum mpg :klein) ;; => 642.9But here is the example in which normal summation and :kahan fails.

(stats/sum [1.0 1.0E100 1.0 -1.0E100]) ;; => 0.0

(stats/sum [1.0 1.0E100 1.0 -1.0E100] :kahan) ;; => 0.0

(stats/sum [1.0 1.0E100 1.0 -1.0E100] :neumayer) ;; => 2.0

(stats/sum [1.0 1.0E100 1.0 -1.0E100] :klein) ;; => 2.0Mean

The mean is a measure of central tendency. fastmath.stats provides several types of means:

- Arithmetic Mean (

mean): The sum of values divided by their count. It’s the most common type of average.

\[\mu = \frac{1}{n} \sum_{i=1}^{n} x_i\]

- Geometric Mean (

geomean): The n-th root of the product of n numbers. Suitable for averaging ratios, growth rates, or values that are multiplicative in nature. Requires all values to be positive. It’s less affected by extreme large values than the arithmetic mean, but more affected by extreme small values.

\[G = \left(\prod_{i=1}^{n} x_i\right)^{1/n} = \exp\left(\frac{1}{n}\sum_{i=1}^{n} \ln(x_i)\right)\]

- Harmonic Mean (

harmean): The reciprocal of the arithmetic mean of the reciprocals of the observations. Appropriate for averaging rates (e.g., speeds). It is sensitive to small values and requires all values to be positive and non-zero.

\[H = \frac{n}{\sum_{i=1}^{n} \frac{1}{x_i}}\]

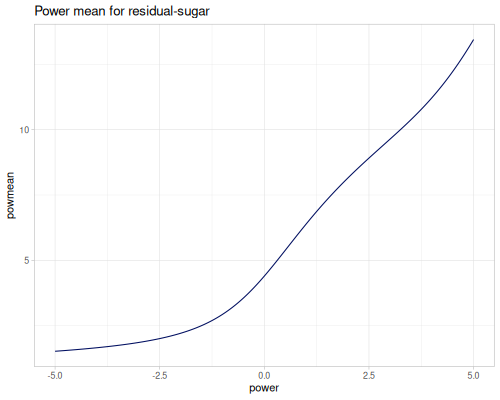

- Power Mean (

powmean): Also known as the generalized mean or Hölder mean. It generalizes the arithmetic, geometric, and harmonic means. Defined by a power parameter \(p\).

\[M_p = \left(\frac{1}{n} \sum_{i=1}^{n} x_i^p\right)^{1/p} \text{ for } (p \neq 0)\]

Special cases:

- \(p \to 0\): Geometric Mean

- \(p = 1\): Arithmetic Mean

- \(p = -1\): Harmonic Mean

- \(p = 2\): Root Mean Square (RMS)

- \(p \to \infty\): Maximum value

- \(p \to -\infty\): Minimum value

The behavior depends on \(p\): higher \(p\) gives more weight to larger values, lower \(p\) gives more weight to smaller values.

(stats/mean residual-sugar) ;; => 6.391414863209474

(stats/geomean residual-sugar) ;; => 4.397022390150047

(stats/harmean residual-sugar) ;; => 2.9295723194226815

(stats/powmean residual-sugar ##-Inf) ;; => 0.6

(stats/powmean residual-sugar -4.5) ;; => 1.5790401256763393

(stats/powmean residual-sugar -1.0) ;; => 2.9295723194226815

(stats/powmean residual-sugar 0.0) ;; => 4.397022390150047

(stats/powmean residual-sugar 1.0) ;; => 6.391414863209474

(stats/powmean residual-sugar 4.5) ;; => 12.228524559326921

(stats/powmean residual-sugar ##Inf) ;; => 65.8All values of power mean for residual-sugar data and range of the power from -5 to 5.

Weighted

Every mean function accepts optional weights vector. Formulas for weighted means are as follows.

- Arithmetic Mean (

mean):

\[\mu_w = \frac{\sum_{i=1}^{n} w_i x_i}{\sum_{i=1}^{n} w_i}\]

- Geometric Mean (

geomean):

\[G_w = \left(\prod_{i=1}^{n} x_i^{w_i}\right)^{1/\sum w_i} = \exp\left(\frac{\sum_{i=1}^{n} w_i \ln(x_i)}{\sum_{i=1}^{n} w_i}\right)\]

- Harmonic Mean (

harmean):

\[H_w = \frac{\sum_{i=1}^{n} w_i}{\sum_{i=1}^{n} \frac{w_i}{x_i}}\]

- Power Mean (

powmean):

\[M_{w,p} = \left(\frac{\sum_{i=1}^{n} w_i x_i^p}{\sum_{i=1}^{n} w_i}\right)^{1/p} \text{ for } (p \neq 0)\]

Let’s calculate mean of hp (horsepower) weighted by wt (car weight).

(stats/mean hp) ;; => 146.6875

(stats/mean hp wt) ;; => 159.99440515968607

(stats/geomean hp) ;; => 131.88367883954564

(stats/geomean hp wt) ;; => 145.7870847521906

(stats/harmean hp) ;; => 118.2288915187372

(stats/harmean hp wt) ;; => 131.56286792601395

(stats/powmean hp -4.5) ;; => 87.4433284945516

(stats/powmean hp wt -4.5) ;; => 95.2308358812506

(stats/powmean hp 4.5) ;; => 193.97486455878905

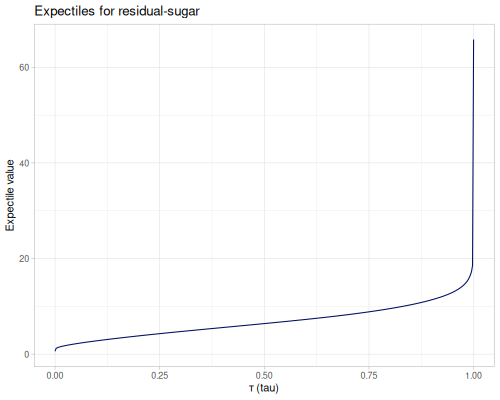

(stats/powmean hp wt 4.5) ;; => 201.37510347727357Expectile

The expectile is a measure of location, related to both the [mean] and [quantile]. For a given level τ (tau, a value between 0 and 1), the τ-th expectile is the value t that minimizes an asymmetrically weighted sum of squared differences from t. This is distinct from quantiles, which minimize an asymmetrically weighted sum of absolute differences.

A key property of expectiles is that the 0.5-expectile is identical to the arithmetic [mean]. As τ varies from 0 to 1, expectiles span a range of values, typically from the minimum (τ=0) to the maximum (τ=1) of the dataset. Like the mean, expectiles are influenced by the magnitude of all data points, making them generally more sensitive to outliers than corresponding quantiles (e.g., the median).

(stats/expectile residual-sugar 0.1) ;; => 2.794600951101003

(stats/expectile residual-sugar 0.5) ;; => 6.391414863209474

(stats/mean residual-sugar) ;; => 6.391414863209474

(stats/expectile residual-sugar 0.9) ;; => 11.368419035291927

(stats/expectile hp wt 0.25) ;; => 130.66198036981677Plotting expectiles for residual-sugar data across the range of τ from 0.0 to 1.0.

Mode

The mode is the value that appears most frequently in a dataset.

For numeric data, mode returns the first mode encountered, while modes returns a sequence of all modes (in increasing order for the default method).

Let’s see that mode returns elements with the highest frequency of hp (showing only first 10 values)

^:kind/hidden

(->> (frequencies hp)

(sort-by second >)

(take 10))| value | frequency |

|---|---|

| 110 | 3 |

| 175 | 3 |

| 180 | 3 |

| 150 | 2 |

| 66 | 2 |

| 245 | 2 |

| 123 | 2 |

| 65 | 1 |

| 62 | 1 |

| 205 | 1 |

(stats/mode hp) ;; => 110.0

(stats/modes hp) ;; => (110.0 175.0 180.0)When dealing with data potentially from a continuous distribution, these functions can estimate the mode using different methods:

:histogram: Mode(s) based on the peak(s) of a histogram.:pearson: Mode estimated using Pearson’s second skewness coefficient (mode ≈ 3 * median - 2 * mean).:kde: Mode(s) based on Kernel Density Estimation, finding original data points with the highest estimated density.- The default method finds the exact most frequent value(s), suitable for discrete data.

(stats/mode residual-sugar) ;; => 1.2

(stats/mode residual-sugar :histogram) ;; => 1.700695134061569

(stats/mode residual-sugar :pearson) ;; => 2.8171702735810538

(stats/mode residual-sugar :kde) ;; => 1.65For weighted data, or data of any type (not just numeric), use wmode and wmodes. wmode returns the first weighted mode (the one with the highest total weight encountered first), and wmodes returns all values that share the highest total weight. If weights are omitted, they default to 1.0 for each value, effectively calculating unweighted modes for any data type.

(stats/wmode [:a :b :c :d] [1 2.5 1 2.5]) ;; => :b

(stats/wmodes [:a :b :c :d] [1 2.5 1 2.5]) ;; => (:b :d)Stats

The stats-map function provides a comprehensive summary of descriptive statistics for a given dataset. It returns a map where keys are statistic names (as keywords) and values are their calculated measures. This function is a convenient way to get a quick overview of the data’s characteristics.

The resulting map contains the following key-value pairs:

:Size- The number of data points in the sequence.:Min- The smallest value in the sequence (see [minimum]).:Max- The largest value in the sequence (see [maximum]).:Range- The difference between the maximum and minimum values.:Mean- The arithmetic average of the values (see [mean]).:Median- The middle value of the sorted sequence (see [median]).:Mode- The most frequently occurring value(s) (see [mode]).:Q1- The first quartile (25th percentile) of the data (see [percentile]).:Q3- The third quartile (75th percentile) of the data (see [percentile]).:Total- The sum of all values in the sequence (see [sum]).:SD- The sample standard deviation, a measure of data dispersion around the mean.:Variance- The sample variance, the square of the standard deviation.:MAD- The Median Absolute Deviation, a robust measure of variability (see [median-absolute-deviation]).:SEM- The Standard Error of the Mean, an estimate of the standard deviation of the sample mean.:LAV- The Lower Adjacent Value, the smallest observation that is not an outlier (see [adjacent-values]).:UAV- The Upper Adjacent Value, the largest observation that is not an outlier (see [adjacent-values]).:IQR- The Interquartile Range, the difference between Q3 and Q1.:LOF- The Lower Outer Fence, a threshold for identifying extreme low outliers (Q1 - 3.0 * IQR).:UOF- The Upper Outer Fence, a threshold for identifying extreme high outliers (Q3 + 3.0 * IQR).:LIF- The Lower Inner Fence, a threshold for identifying mild low outliers (Q1 - 1.5 * IQR).:UIF- The Upper Inner Fence, a threshold for identifying mild high outliers (Q3 + 1.5 * IQR).:Outliers- A list of data points considered outliers (values outside the inner fences, see [outliers]).:Kurtosis- A measure of the “tailedness” or “peakedness” of the distribution (see [kurtosis]).:Skewness- A measure of the asymmetry of the distribution (see [skewness]).

(stats/stats-map residual-sugar){:IQR 8.200000000000001,

:Kurtosis 3.4698201025634363,

:LAV 0.6,

:LIF -10.600000000000001,

:LOF -22.900000000000002,

:MAD 3.6,

:Max 65.8,

:Mean 6.391414863209486,

:Median 5.2,

:Min 0.6,

:Mode 1.2,

:Outliers (22.6 23.5 26.05 26.05 31.6 31.6 65.8),

:Q1 1.7,

:Q3 9.9,

:Range 65.2,

:SD 5.072057784014863,

:SEM 0.07247276021182479,

:Size 4898,

:Skewness 1.0770937564241123,

:Total 31305.150000000063,

:UAV 22.0,

:UIF 22.200000000000003,

:UOF 34.5,

:Variance 25.72577016438576}Quantiles and Percentiles

Statistics related to points dividing the distribution of data.

percentile,percentilesquantile,quantileswquantile,wquantilesmedian,median-3wmedian

Quantiles and percentiles are statistics that divide the range of a probability distribution into continuous intervals with equal probabilities, or divide the observations in a sample in the same way.

fastmath.stats provides several functions to calculate these measures:

percentile: Calculates the p-th percentile (a value from 0 to 100) of a sequence.percentiles: Calculates multiple percentiles for a sequence.quantile: Calculates the q-th quantile (a value from 0.0 to 1.0) of a sequence. This is equivalent to(percentile vs (* q 100.0)).quantiles: Calculates multiple quantiles for a sequence.median: Calculates the median (0.5 quantile or 50th percentile) of a sequence.median-3: A specialized function that calculates the median of exactly three numbers.

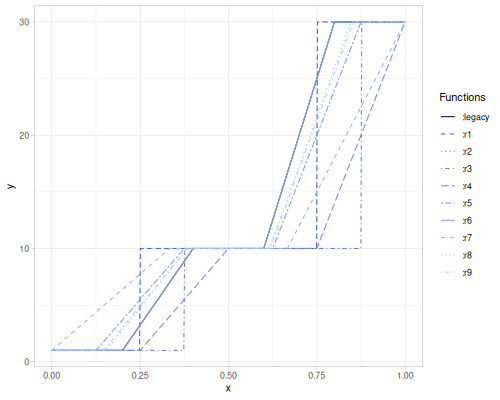

All percentile, quantiles, quantile, quantiles, and median functions accept an optional estimation-strategy keyword. This parameter determines how the quantile is estimated, particularly how interpolation is handled when the desired quantile falls between data points in the sorted sequence.

(stats/quantile mpg 0.25) ;; => 15.274999999999999

(stats/quantiles mpg [0.1 0.25 0.5 0.75 0.9]) ;; => [13.600000000000001 15.274999999999999 19.2 22.8 30.4]

(stats/percentile mpg 50.0) ;; => 19.2

(stats/percentiles residual-sugar [10 25 50 75 90]) ;; => [1.2 1.7 5.2 9.9 14.0]

(stats/median mpg) ;; => 19.2

(stats/median-3 15 5 10) ;; => 10.0The available estimation-strategy values are :legacy (default), :r1, :r2, :r3, :r4, :r5, :r6, :r7, :r8 and :r9. Formulas for all of them can be found on this Wikipedia article. :legacy uses estimate of the form: \(Q_p = x_{\lceil p(N+1) - 1/2 \rceil}\)

The plot below illustrates the differences between these estimation strategies for the sample vs = [1 10 10 30].

(def vs [1 10 10 30])

(stats/quantiles vs [0.1 0.25 0.5 0.75 0.9] :legacy) ;; => [1.0 3.25 10.0 25.0 30.0]

(stats/quantiles vs [0.1 0.25 0.5 0.75 0.9] :r1) ;; => [1.0 1.0 10.0 10.0 30.0]

(stats/quantiles vs [0.1 0.25 0.5 0.75 0.9] :r2) ;; => [1.0 5.5 10.0 20.0 30.0]

(stats/quantiles vs [0.1 0.25 0.5 0.75 0.9] :r3) ;; => [1.0 1.0 10.0 10.0 30.0]

(stats/quantiles vs [0.1 0.25 0.5 0.75 0.9] :r4) ;; => [1.0 1.0 10.0 10.0 22.0]

(stats/quantiles vs [0.1 0.25 0.5 0.75 0.9] :r5) ;; => [1.0 5.5 10.0 20.0 30.0]

(stats/quantiles vs [0.1 0.25 0.5 0.75 0.9] :r6) ;; => [1.0 3.25 10.0 25.0 30.0]

(stats/quantiles vs [0.1 0.25 0.5 0.75 0.9] :r7) ;; => [3.7 7.75 10.0 15.0 24.000000000000004]

(stats/quantiles vs [0.1 0.25 0.5 0.75 0.9] :r8) ;; => [1.0 4.749999999999998 10.0 21.66666666666667 30.0]

(stats/quantiles vs [0.1 0.25 0.5 0.75 0.9] :r9) ;; => [1.0 4.9375 10.0 21.25 30.0]Weighted

There are also functions to calculate weighted quantiles and medians. These are useful when individual data points have different levels of importance or contribution.

wquantile: Calculates the q-th weighted quantile for a sequencevswith correspondingweights.wquantiles: Calculates multiple weighted quantiles for a sequencevswithweights.wmedian: Calculates the weighted median (0.5 weighted quantile) forvswithweights.

All these functions accept an optional method keyword argument that specifies the interpolation strategy when a quantile falls between points in the weighted empirical cumulative distribution function (ECDF). The available methods are:

:linear(Default): Performs linear interpolation between the data values corresponding to the cumulative weights surrounding the target quantile.:step: Uses a step function (specifically, step-before interpolation) based on the weighted ECDF. The result is the data value whose cumulative weight range includes the target quantile.:average: Computes the average of the step-before and step-after interpolation methods. This can be useful when a quantile corresponds exactly to a cumulative weight boundary.

Let’s define a sample dataset and weights:

(def sample-data [10 15 30 50 100])(def sample-weights [1 2 5 1 1])(stats/wquantile sample-data sample-weights 0.25) ;; => 13.75

(stats/wquantile sample-data sample-weights 0.5) ;; => 21.0

(stats/wmedian sample-data sample-weights) ;; => 21.0

(stats/wquantile sample-data sample-weights 0.75) ;; => 28.5

(stats/wmedian sample-data sample-weights :linear) ;; => 21.0

(stats/wmedian sample-data sample-weights :step) ;; => 30.0

(stats/wmedian sample-data sample-weights :average) ;; => 22.5

(stats/wquantiles sample-data sample-weights [0.2 0.5 0.8]) ;; => [12.5 21.0 30.0]

(stats/wquantiles sample-data sample-weights [0.2 0.5 0.8] :step) ;; => [15.0 30.0 30.0]

(stats/wquantiles sample-data sample-weights [0.2 0.5 0.8] :average) ;; => [12.5 22.5 30.0]Using mpg data and wt (car weight) as weights:

(stats/wmedian mpg wt) ;; => 17.702906976744185

(stats/wquantile mpg wt 0.25) ;; => 14.894033613445378

(stats/wquantiles mpg wt [0.1 0.25 0.5 0.75 0.9] :average) ;; => [10.4 14.85 17.55 21.2 25.2]When weights are equal to 1.0, then:

:linearmethod is the same as:r4estimation strategy inquantiles:stepis the same as:r1:averagehas no corresponding strategy

(stats/quantiles mpg [0.1 0.25 0.5 0.75 0.9] :r4) ;; => [13.5 15.2 19.2 22.8 29.78]

(stats/wquantiles mpg (repeat (count mpg) 1.0) [0.1 0.25 0.5 0.75 0.9]) ;; => [13.5 15.2 19.2 22.8 29.78]

(stats/quantiles mpg [0.1 0.25 0.5 0.75 0.9] :r1) ;; => [14.3 15.2 19.2 22.8 30.4]

(stats/wquantiles mpg (repeat (count mpg) 1.0) [0.1 0.25 0.5 0.75 0.9] :step) ;; => [14.3 15.2 19.2 22.8 30.4]

(stats/wquantiles mpg (repeat (count mpg) 1.0) [0.1 0.25 0.5 0.75 0.9] :average) ;; => [13.8 15.2 19.2 22.8 28.85]Measures of Dispersion/Deviation

Statistics describing the spread or variability of data.

variance,population-variancestddev,population-stddevwvariance,population-wvariancewstddev,population-wstddevpooled-variance,pooled-stddevvariation,l-variationmean-absolute-deviationmedian-absolute-deviation,madpooled-madsem

Variance and standard deviation

Variance and standard deviation are fundamental measures of the dispersion or spread of a dataset around its mean.

Variance quantifies the average squared difference of each data point from the mean. A higher variance indicates that the data points are more spread out, while a lower variance indicates they are clustered closer to the mean.

Standard Deviation is the square root of the variance. It is expressed in the same units as the data, making it more interpretable than variance as a measure of spread.

Sample Variance (

variance) and Sample Standard Deviation (stddev): These are estimates of the population variance and standard deviation, calculated from a sample of data. They use a denominator of \(N-1\) (Bessel’s correction) to provide an unbiased estimate of the population variance.

\[s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n-1}\]

\[s = \sqrt{s^2}\]

Both functions can optionally accept a pre-calculated mean (mu) as a second argument.

- Population Variance (

population-variance) and Population Standard Deviation (population-stddev): These are used when the data represents the entire population of interest, or when a biased estimate (maximum likelihood estimate) from a sample is desired. They use a denominator of \(N\).

\[\sigma^2 = \frac{\sum_{i=1}^{N} (x_i - \mu)^2}{N}\]

\[\sigma = \sqrt{\sigma^2}\]

These also accept an optional pre-calculated population mean (mu).

- Weighted Variance (

wvariance,population-wvariance) and Weighted Standard Deviation (wstddev,population-wstddev): These calculate variance and standard deviation when each data point has an associated weight. For weighted sample variance (unbiased form, where \(w_i\) are weights):

\[\bar{x}_w = \frac{\sum w_i x_i}{\sum w_i}\]

\[s_w^2 = \frac{\sum w_i (x_i - \bar{x}_w)^2}{(\sum w_i) - 1}\]

For weighted population variance:

\[\sigma_w^2 = \frac{\sum w_i (x_i - \bar{x}_w)^2}{\sum w_i}\]

Weighted standard deviations are the square roots of their respective variances.

- Pooled Variance (

pooled-variance) and Pooled Standard Deviation (pooled-stddev): These are used to estimate a common variance when data comes from several groups that are assumed to have the same population variance. Following methods can be used (where \(k\) is the number of groups, each with \(n_i\) number of observations and sample variance \(s_i^2\)):unbiased(default) \[s_p^2 = \frac{\sum_{i=1}^{k} (n_i-1)s_i^2}{\sum_{i=1}^{k} n_i - k}\]:biased\[s_p^2 = \frac{\sum_{i=1}^{k} (n_i-1)s_i^2}{\sum_{i=1}^{k} n_i}\]:avg- simple average of group variances. \[s_p^2 = \frac{\sum_{i=1}^{k} s_i^2}{k}\]

Pooled standard deviation is \(\sqrt{s_p^2}\).

(stats/variance mpg) ;; => 36.32410282258065

(stats/stddev mpg) ;; => 6.026948052089105

(stats/population-variance mpg) ;; => 35.188974609375

(stats/population-stddev mpg) ;; => 5.932029552301219

(stats/variance mpg (stats/mean mpg)) ;; => 36.32410282258065

(stats/population-variance mpg (stats/mean mpg)) ;; => 35.188974609375Weighted variance and standard deviation

(stats/wvariance hp wt) ;; => 4477.350800154701

(stats/wstddev hp wt) ;; => 66.91300919966685

(stats/population-wvariance hp wt) ;; => 4433.861107869416

(stats/population-wstddev hp wt) ;; => 66.58724433305088Pooled variance and standard deviation

(stats/pooled-variance [setosa-sepal-length virginica-sepal-length]) ;; => 0.264295918367347

(stats/pooled-stddev [setosa-sepal-length virginica-sepal-length]) ;; => 0.5140971876672221

(stats/pooled-variance [setosa-sepal-length virginica-sepal-length] :biased) ;; => 0.2590100000000001

(stats/pooled-variance [setosa-sepal-length virginica-sepal-length] :avg) ;; => 0.264295918367347Beyond variance and standard deviation, we have three additional functions:

- Coefficient of Variation (

variation): This is a standardized measure of dispersion, calculated as the ratio of the standard deviation \(s\) to the mean \(\bar{x}\).

\[CV = \frac{s}{\bar{x}}\]

The CV is unitless, making it useful for comparing the variability of datasets with different means or units. It’s most meaningful for data measured on a ratio scale (i.e., with a true zero point) and where all values are positive.

- Standard Error of the Mean (

sem): The SEM estimates the standard deviation of the sample mean if you were to draw multiple samples from the same population. It indicates how precisely the sample mean estimates the true population mean.

\[SEM = \frac{s}{\sqrt{n}}\]

where \(s\) is the sample standard deviation and \(n\) is the sample size. A smaller SEM suggests a more precise estimate of the population mean.

- L-variation (

l-variation): Calculates the coefficient of L-variation. This is a dimensionless measure of dispersion, analogous to the coefficient of variation.

\[\tau_2 = \lambda_2 / \lambda_1\]

(stats/variation mpg) ;; => 0.29998808160966145

(stats/variation residual-sugar) ;; => 0.7935735502339006

(stats/l-variation mpg) ;; => 0.1691378280874466

(stats/l-variation residual-sugar) ;; => 0.43403938821477966

(stats/sem mpg) ;; => 1.0654239593728148

(stats/sem residual-sugar) ;; => 0.07247276021182479MAD

MAD typically refers to Median Absolute Deviation, a robust measure of statistical dispersion. fastmath.stats also provides the Mean Absolute Deviation.

- Median Absolute Deviation (

median-absolute-deviationormad): This is a robust measure of the variability of a univariate sample. It is defined as the median of the absolute deviations from the data’s median.

\[MAD = \text{median}(|X_i - \text{median}(X)|)\]

If a specific center \(c\) is provided, it’s \(MAD_c = \text{median}(|X_i - c|)\). Also, different estimation strategies can be used, see [median] MAD is less sensitive to outliers than the standard deviation.

- Mean Absolute Deviation (

mean-absolute-deviation): This measures variability as the average of the absolute deviations from a central point, typically the data’s mean.

\[MeanAD = \frac{1}{n} \sum_{i=1}^{n} |X_i - \text{mean}(X)|\]

If a specific center \(c\) is provided, it’s \(MeanAD_c = \frac{1}{n} \sum_{i=1}^{n} |X_i - c|\). MeanAD is more sensitive to outliers than MAD but less sensitive than the standard deviation.

- Pooled MAD (

pooled-mad): This function calculates a pooled estimate of the Median Absolute Deviation when data comes from several groups. For each group \(i\), absolute deviations from its median \(M_i\) are calculated: \(Y_{ij} = |X_{ij} - M_i|\). The pooled MAD is then the median of all such \(Y_{ij}\) values, scaled by a constantconst(which defaults to approximately 1.4826, to make it comparable to the standard deviation for normal data).

\[PooledMAD = \text{const} \cdot \text{median}(\{Y_{ij} \mid \text{for all groups } i \text{ and observations } j \text{ in group } i\})\]

(stats/mad mpg) ;; => 3.6500000000000004

(stats/median-absolute-deviation mpg) ;; => 3.6500000000000004

(stats/median-absolute-deviation mpg (stats/median mpg) :r3) ;; => 3.6000000000000014

(stats/median-absolute-deviation mpg (stats/mean mpg)) ;; => 4.299999999999999

(stats/mean-absolute-deviation mpg) ;; => 4.714453125

(stats/mean-absolute-deviation mpg (stats/median mpg)) ;; => 4.634375

(stats/pooled-mad [setosa-sepal-length virginica-sepal-length]) ;; => 0.4447806655516804

(stats/pooled-mad [setosa-sepal-length virginica-sepal-length] 1.0) ;; => 0.2999999999999998Moments and Shape

Moments and shape statistics describe the form of a dataset’s distribution, particularly its symmetry and peakedness.

momentskewnesskurtosisl-moment

Conventional Moments (moment)

The moment function calculates statistical moments of a dataset. Moments can be central (around the mean), raw (around zero), or around a specified center. They can also be absolute and/or normalized.

k-th Central Moment: \(\mu_k = E[(X - \mu)^k] \approx \frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^k\). Calculated when

centerisnil(default) and:mean?istrue(default).k-th Raw Moment (about origin): \(\mu'_k = E[X^k] \approx \frac{1}{n} \sum_{i=1}^{n} x_i^k\). Calculated if

centeris0.0.k-th Absolute Central Moment: \(E[|X - \mu|^k] \approx \frac{1}{n} \sum_{i=1}^{n} |x_i - \bar{x}|^k\). Calculated if

:absolute?istrue.Normalization: If

:normalize?istrue, the moment is divided by \(\sigma^k\) (where \(\sigma\) is the standard deviation), yielding a scale-invariant measure. For example, the 3rd normalized central moment is related to skewness, and the 4th to kurtosis.Power of sum of differences: If

:mean?isfalse, the function returns the sum \(\sum (x_i - c)^k\) (or sum of absolute values) instead of the mean.

The order parameter specifies \(k\). For example, the 2nd central moment for \(k=2\) is the variance.

(stats/moment mpg 2) ;; => 35.188974609374995

(stats/variance mpg) ;; => 36.32410282258065

(stats/moment mpg 3) ;; => 133.68672198486328

(stats/moment mpg 4 {:normalize? true}) ;; => 2.6272339701791085

(stats/moment mpg 1 {:absolute? true, :center (stats/median mpg)}) ;; => 4.634375Skewness

Skewness measures the asymmetry of a probability distribution about its mean. fastmath.stats/skewness offers several types:

Moment-based (sensitive to outliers):

:G1(Default): Sample skewness based on the 3rd standardized moment, adjusted for sample bias (via Apache Commons Math).

\[G_1 = \frac{n}{(n-1)(n-2)} \sum_{i=1}^n \left(\frac{x_i - \bar{x}}{s}\right)^3\]

:g1or:pearson: Pearson’s moment coefficient of skewness, another bias-adjusted version of the 3rd standardized central moment \(m_3\).

\[g_1 = \frac{m_3}{m_2^{3/2}}\]

:b1: Sample skewness coefficient, related to \(g_1\).

\[b_1 = \frac{m_3}{s^3}\]

:skew: Skewness used in BCa

\[SKEW = \frac{\sum_{i=1}^n (x_i - \bar{x})^3}{(\sum_{i=1}^n (x_i - \bar{x})^2)^{3/2}} = \frac{g_1}{\sqrt{n}}\]

Robust (less sensitive to outliers):

:median: Median Skewness / Pearson’s first skewness coefficient.

\[S_P = 3 \frac{\text{mean} - \text{median}}{\text{stddev}}\]

:mode: Pearson’s second skewness coefficient. Mode estimation method can be specified.

\[S_K = \frac{\text{mean} - \text{mode}}{\text{stddev}}\]

:bowleyor:yule(with \(u=0.25\)): Based on quartiles \(Q_1, Q_2, Q_3\).

\[S_B = \frac{(Q_3 - Q_2) - (Q_2 - Q_1)}{Q_3 - Q_1} = \frac{Q_3 + Q_1 - 2Q_2}{Q_3 - Q_1}\]

:yuleor:B1(Yule’s coefficient): Generalization of Bowley’s, using quantiles \(Q_u, Q_{0.5}, Q_{1-u}\).

\[B_1 = S_Y(u) = \frac{(Q_{1-u} - Q_{0.5}) - (Q_{0.5} - Q_u)}{Q_{1-u} - Q_u}\]

:B3: Robust measure by Groeneveld and Meeden

\[B_3 = \frac{\text{mean} - \text{median}}{\text{mean}(|X_i - \text{median}|)}\]

:hogg: Based on comparing trimmed means (\(U_{0.05}\): mean of top 5%, \(L_{0.05}\): mean of bottom 5%, \(M_{0.25}\): 25% trimmed mean).

\[S_H = \frac{U_{0.05} - M_{0.25}}{M_{0.25} - L_{0.05}}\]

:l-skewness: L-moments based skewness.

\[\tau_3 = \lambda_3 / \lambda_2\]

Positive skewness indicates a tail on the right side of the distribution; negative skewness indicates a tail on the left. Zero indicates symmetry.

(stats/skewness residual-sugar) ;; => 1.0770937564240939

(stats/skewness residual-sugar :G1) ;; => 1.0770937564240939

(stats/skewness residual-sugar :g1) ;; => 1.0767638711454521

(stats/skewness residual-sugar :pearson) ;; => 1.0767638711454521

(stats/skewness residual-sugar :b1) ;; => 1.07643413178962

(stats/skewness residual-sugar :skew) ;; => 0.01538548123095513

(stats/skewness residual-sugar :median) ;; => 0.7046931919610692

(stats/skewness residual-sugar :mode) ;; => 1.0235322790625094

(stats/skewness residual-sugar [:mode :histogram]) ;; => 0.9248159088272246

(stats/skewness residual-sugar [:mode :kde]) ;; => 0.9348108923665123

(stats/skewness residual-sugar :bowley) ;; => 0.1463414634146341

(stats/skewness residual-sugar :yule) ;; => 0.1463414634146341

(stats/skewness residual-sugar [:yule 0.1]) ;; => 0.37499999999999994

(stats/skewness residual-sugar :B3) ;; => 0.2864727410181956

(stats/skewness residual-sugar :hogg) ;; => 3.0343529984131266

(stats/skewness residual-sugar :l-skewness) ;; => 0.22296648073302056Effect of an outlier is visible for moment based skewness, while has no effect when robust method is used.

(stats/skewness (conj residual-sugar -1000)) ;; => -58.659786835804155

(stats/skewness (conj residual-sugar -1000) :l-skewness) ;; => 0.13914390517414776Kurtosis

Kurtosis measures the “tailedness” or “peakedness” of a distribution. High kurtosis means heavy tails (more outliers) and a sharp peak (leptokurtic); low kurtosis means light tails and a flatter peak (platykurtic). fastmath.stats/kurtosis offers several types:

Moment-based (sensitive to outliers):

:G2(Default): Sample kurtosis (Fisher’s definition, not excess), adjusted for sample bias (via Apache Commons Math). For a normal distribution, this is approximately 3.

\[G_2 = \frac{(n+1)n}{(n-1)(n-2)(n-3)} \sum_{i=1}^n \left(\frac{x_i - \bar{x}}{s}\right)^4 - 3\frac{(n-1)^2}{(n-2)(n-3)}\]

:g2or:excess: Sample excess kurtosis. For a normal distribution, this is approximately 0.

\[g_2 = \frac{m_4}{m_2^2}-3\]

:kurt: Kurtosis defined as \(g_2 + 3\).

\[g_{kurt} = \frac{m_4}{m_2^2} = g_2 + 3\]

:b2: Sample kurtosis

\[b_2 = \frac{m_4}{s^4}-3\]

Robust (less sensitive to outliers):

:geary: Geary’s ‘g’ measure of kurtosis. Normal \(\approx \sqrt{2/\pi} \approx 0.798\).

\[g = \frac{MeanAD}{\sigma^2}\]

:moors: Based on octiles \(E_i\) (quantiles \(i/8\)) and centered by subtracting \(1.233\) (Moors’ constant for normality).

\[M_0 = \frac{(E_7-E_5) + (E_3-E_1)}{E_6-E_2}-1.233\]

:crow(Crow-Siddiqui): Based on quantiles \(Q_\alpha, Q_{1-\alpha}, Q_\beta, Q_{1-\beta}\) and centered for normality (\(c\) is based on \(\alpha\) and \(\beta\)). By default \(\alpha=0.025\) and \(\beta=0.25\).

\[CS(\alpha, \beta) = \frac{Q_{1-\alpha} - Q_{\alpha}}{Q_{1-\beta} - Q_{\beta}}-c\]

:hogg: Based on trimmed means \(U_p\) (mean of top \(p\%\)) and \(L_p\) (mean of bottom \(p\%\)) and centered by subtracting \(2.585\). By default \(\alpha=0.005\) and \(\beta=0.5\).

\[K_H(\alpha, \beta) = \frac{U_{\alpha} - L_{\alpha}}{U_{\beta} - L_{\beta}}-2.585\]

:l-kurtosis: L-moments based kurtosis.

\[\tau_4 = \lambda_4 / \lambda_2\]

(stats/kurtosis residual-sugar) ;; => 3.4698201025636317

(stats/kurtosis residual-sugar :G2) ;; => 3.4698201025636317

(stats/kurtosis residual-sugar :g1) ;; => 3.4698201025636317

(stats/kurtosis residual-sugar :excess) ;; => 3.4650542966048463

(stats/kurtosis residual-sugar :kurt) ;; => 6.465054296604846

(stats/kurtosis residual-sugar :b2) ;; => 3.4624146909177034

(stats/kurtosis residual-sugar :geary) ;; => 0.8336299967214688

(stats/kurtosis residual-sugar :moors) ;; => -0.35495121951219544

(stats/kurtosis residual-sugar :crow) ;; => -0.8936518297189449

(stats/kurtosis residual-sugar [:crow 0.05 0.25]) ;; => -0.6581758315571915

(stats/kurtosis residual-sugar :hogg) ;; => -0.5329102382273203

(stats/kurtosis residual-sugar [:hogg 0.025 0.45]) ;; => -0.5505736317691476

(stats/kurtosis residual-sugar :l-kurtosis) ;; => 0.02007386147996773Effect of an outlier is visible for moment based kurtosis, while has no effect when robust method is used.

(stats/kurtosis (conj residual-sugar -1000 1000)) ;; => 2167.8435026710868

(stats/kurtosis (conj residual-sugar -1000 1000) :l-kurtosis) ;; => 0.1447670840462904L-moment

L-moments are summary statistics analogous to conventional moments but are computed from linear combinations of order statistics (sorted data). They are more robust to outliers and provide better estimates for small samples compared to conventional moments.

l-moment vs order: Calculates the L-moment of a specificorder.- \(\lambda_1\): L-location (identical to the mean).

- \(\lambda_2\): L-scale (a measure of dispersion).

- Higher orders relate to shape.

- Trimmed L-moments (TL-moments) can be calculated by specifying

:s(left trim) and:t(right trim) as number of trimmed samples - L-moment Ratios: If

:ratio? true, normalized L-moments are returned.- \(\tau_3 = \lambda_3 / \lambda_2\): Coefficient of L-skewness (same as

(stats/skewness vs :l-skewness)). - \(\tau_4 = \lambda_4 / \lambda_2\): Coefficient of L-kurtosis (same as

(stats/kurtosis vs :l-kurtosis)).

- \(\tau_3 = \lambda_3 / \lambda_2\): Coefficient of L-skewness (same as

L-moments often provide more reliable inferences about the underlying distribution shape, especially when data may contain outliers or come from heavy-tailed distributions.

(stats/l-moment mpg 1) ;; => 20.090624999999996

(stats/mean mpg) ;; => 20.090625

(stats/l-moment mpg 2) ;; => 3.3980846774193565

(stats/l-moment mpg 3) ;; => 0.534375

(stats/l-moment residual-sugar 3) ;; => 0.6185370660798765

(stats/l-moment residual-sugar 3 {:s 10}) ;; => 0.3017960335908669

(stats/l-moment residual-sugar 3 {:t 10}) ;; => 0.029252613288362147

(stats/l-moment residual-sugar 3 {:s 10, :t 10}) ;; => 0.003909359312292547Relation to skewness and kurtosis

(stats/l-moment residual-sugar 3 {:ratio? true}) ;; => 0.22296648073302056

(stats/skewness residual-sugar :l-skewness) ;; => 0.22296648073302056

(stats/l-moment residual-sugar 4 {:ratio? true}) ;; => 0.02007386147996773

(stats/kurtosis residual-sugar :l-kurtosis) ;; => 0.02007386147996773Intervals and Extents

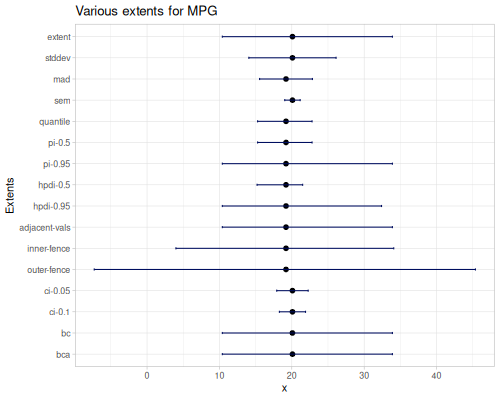

This section provides functions to describe the spread or define specific ranges and intervals within a dataset.

span,iqrextent,stddev-extent,mad-extent,sem-extentpercentile-extent,quantile-extentpi,pi-extenthpdi-extentadjacent-valuesinner-fence-extent,outer-fence-extentpercentile-bc-extent,percentile-bca-extentci

Basic Range: Functions like

span(\(max - min\)) andextent(providing \([min, max]\) and optionally the mean) offer simple measures of the total spread of the data.Interquartile Range:

iqr(\(Q_3 - Q_1\)) specifically measures the spread of the middle 50% of the data, providing a robust alternative to the total range.Symmetric Spread Intervals: Functions ending in

-extentsuch asstddev-extent,mad-extent, andsem-extentdefine intervals typically centered around the mean or median. They represent a range defined by adding/subtracting a multiple (usually 1) of a measure of dispersion (Standard Deviation, Median Absolute Deviation, or Standard Error of the Mean) from the central point.Quantile-Based Intervals:

percentile-extent,quantile-extent,pi,pi-extent, andhpdi-extentdefine intervals based on quantiles or percentiles of the data. These functions capture specific ranges containing a certain percentage of the data points (e.g., the middle 95% defined by quantiles 0.025 and 0.975).hpdi-extentcalculates the shortest interval containing a given proportion of data, based on empirical density.Box Plot Boundaries:

adjacent-values(LAV, UAV) and fence functions (inner-fence-extent,outer-fence-extent) calculate specific bounds based on quartiles and multiples of the IQR. These are primarily used in box plot visualization and as a conventional method for identifying potential outliers.Confidence and Prediction Intervals:

ci,percentile-bc-extent, andpercentile-bca-extentprovide inferential intervals.ciestimates a confidence interval for the population mean using the t-distribution.percentile-bc-extentandpercentile-bca-extent(Bias-Corrected and Bias-Corrected Accelerated) are advanced bootstrap methods for estimating confidence intervals for statistics, offering robustness against non-normality and bias.

Note that:

- \(IQR = Q_3-Q_1\)

- \(LIF=Q_1-1.5 \times IQR\)

- \(UIF=Q_3+1.5 \times IQR\)

- \(LOF=Q_1-3\times IQR\)

- \(UOF=Q_3+3\times IQR\)

- \(CI=\bar{x} \pm t_{\alpha/2, n-1} \frac{s}{\sqrt{n}}\)

| Function | Returned value |

|---|---|

span |

\(max-min\) |

iqr |

\(Q_3-Q_1\) |

extent |

[min, max, mean] or [min, max] (when :mean? is false) |

stddev-extent |

[mean - stddev, mean + stddev, mean] |

mad-extent |

[median - mad, median + mad, median] |

sem-extent |

[mean - sem, mean + sem, mean] |

percentile-extent |

[p1-val, p2-val, median] with default p1=25 and p2=75 |

quantile-extent |

[q1-val, q2-val, median] with default q1=0.25 and q2=0.75 |

pi |

{p1 p1-val p2 p2-val} defined by size=p2-p1 |

pi-extent |

[p1-val, p2-val, median] defined by size=p2-p1 |

hdpi-extent |

[p1-val, p2-val, median] defined by size=p2-p1 |

adjacent-values |

[LAV, UAV, median] |

inner-fence-extent |

[LIF, UIF, median] |

outer-fence-extent |

[LOF, UOF, median] |

ci |

[lower upper mean] |

percentile-bc-extent |

[lower upper mean] |

percentile-bca-extent |

[lower upper mean] |

(stats/span mpg) ;; => 23.5

(stats/iqr mpg) ;; => 7.525000000000002

(stats/extent mpg) ;; => #vec3 [10.4, 33.9, 20.090625]

(stats/extent mpg false) ;; => #vec2 [10.4, 33.9]

(stats/stddev-extent mpg) ;; => [14.063676947910896 26.117573052089103 20.090625]

(stats/mad-extent mpg) ;; => [15.549999999999999 22.85 19.2]

(stats/sem-extent mpg) ;; => [19.025201040627184 21.156048959372814 20.090625]

(stats/percentile-extent mpg) ;; => [15.274999999999999 22.8 19.2]

(stats/percentile-extent mpg 2.5 97.5) ;; => [10.4 33.9 19.2]

(stats/percentile-extent mpg 2.5 97.5 :r9) ;; => [10.4 33.628125 19.2]

(stats/quantile-extent mpg) ;; => [15.274999999999999 22.8 19.2]

(stats/quantile-extent mpg 0.025 0.975) ;; => [10.4 33.9 19.2]

(stats/quantile-extent mpg 0.025 0.975 :r9) ;; => [10.4 33.628125 19.2]

(stats/pi mpg 0.95) ;; => {2.5 10.4, 97.5 33.9}

(stats/pi-extent mpg 0.95) ;; => [10.4 33.9 19.2]

(stats/hpdi-extent mpg 0.95) ;; => [10.4 32.4 19.2]

(stats/adjacent-values mpg) ;; => [10.4 33.9 19.2]

(stats/inner-fence-extent mpg) ;; => [3.9874999999999954 34.087500000000006 19.2]

(stats/outer-fence-extent mpg) ;; => [-7.300000000000008 45.37500000000001 19.2]

(stats/ci mpg) ;; => [17.917678508746246 22.263571491253753 20.090625]

(stats/ci mpg 0.1) ;; => [18.284178665508097 21.8970713344919 20.090625]

(stats/percentile-bc-extent mpg) ;; => [10.4 33.9 20.090625]

(stats/percentile-bc-extent mpg 10.0) ;; => [14.85121570396848 32.66635783408668 20.090625]

(stats/percentile-bca-extent mpg) ;; => [10.4 33.9 20.090625]

(stats/percentile-bca-extent mpg 10.0) ;; => [14.79162798537463 32.44980004741413 20.090625]

Outlier Detection

Outlier detection involves identifying data points that are significantly different from other observations. Outliers can distort statistical analyses and require careful handling. fastmath.stats provides functions to find and optionally remove such values based on the Interquartile Range (IQR) method.

outliers

outliers function use the inner fence rule based on the IQR and returns a sequence containing only the data points identified as outliers.

- Lower Inner Fence (LIF): \(Q_1 - 1.5 \times IQR\)

- Upper Inner Fence (UIF): \(Q_3 + 1.5 \times IQR\)

Where \(Q_1\) is the first quartile (25th percentile) and \(Q_3\) is the third quartile (75th percentile). Points falling below the LIF or above the UIF are considered outliers.

Function accepts an optional estimation-strategy keyword (see [quantile]) to control how quartiles are calculated, which affects the fence boundaries.

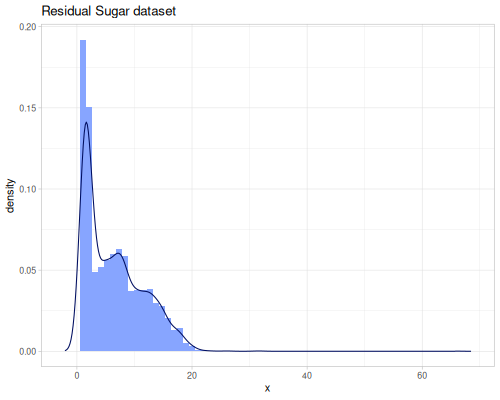

Let’s find the outliers in the residual-sugar data.

(stats/outliers residual-sugar)(23.5 31.6 31.6 65.8 26.05 26.05 22.6)Data Transformation

Functions to modify data (scaling, normalizing, transforming).

standardize,robust-standardize,demeanrescaleremove-outlierstrim,trim-lower,trim-upper,winsorbox-cox-infer-lambda,box-cox-transformationyeo-johnson-infer-lambda,yeo-johnson-transformation

Data transformations are often necessary preprocessing steps in statistical analysis and machine learning. They can help meet the assumptions of certain models (e.g., normality, constant variance), improve interpretability, or reduce the influence of outliers. fastmath.stats offers several functions for these purposes, broadly categorized into linear scaling/centering, outlier handling, and power transformations for normality.

Let’s demonstrate some of these transformations using the residual-sugar data from the wine quality dataset.

(stats/mean residual-sugar) ;; => 6.391414863209474

(stats/stddev residual-sugar) ;; => 5.072057784014863

(stats/median residual-sugar) ;; => 5.2

(stats/mad residual-sugar) ;; => 3.6

(stats/extent residual-sugar false) ;; => #vec2 [0.6, 65.8]

(count residual-sugar) ;; => 4898

Linear Transformations: standardize, robust-standardize, demean, and rescale linearly transform data, preserving its shape but changing its location and/or scale.

demeancenters the data by subtracting the mean, resulting in a dataset with a mean of zero.standardizescales the demeaned data by dividing by the standard deviation, resulting in data with mean zero and standard deviation one (z-score normalization). This makes the scale of different features comparable.robust-standardizeprovides a version less sensitive to outliers by centering around the median and scaling by the Median Absolute Deviation (MAD) or a quantile range (like the IQR).rescalelinearly maps the data to a specific target range (e.g., [0, 1]), useful for algorithms sensitive to input scale.

(def residual-sugar-demeaned (-> residual-sugar stats/demean))(def residual-sugar-standardized (-> residual-sugar stats/standardize))(def residual-sugar-robust-standardized (-> residual-sugar stats/robust-standardize))(def residual-sugar-rescaled (-> residual-sugar stats/rescale))(stats/mean residual-sugar-demeaned) ;; => -3.2386416038881656E-16

(stats/stddev residual-sugar-demeaned) ;; => 5.072057784014863

(stats/median residual-sugar-demeaned) ;; => -1.1914148632094737

(stats/mad residual-sugar-demeaned) ;; => 3.5999999999999996

(stats/extent residual-sugar-demeaned false) ;; => #vec2 [-5.791414863209474, 59.40858513679052]

(stats/mean residual-sugar-standardized) ;; => -1.5386267642212256E-16

(stats/stddev residual-sugar-standardized) ;; => 1.0000000000000004

(stats/median residual-sugar-standardized) ;; => -0.23489773065368974

(stats/mad residual-sugar-standardized) ;; => 0.7097710935679375

(stats/extent residual-sugar-standardized false) ;; => #vec2 [-1.1418274613238324, 11.712915677739927]

(stats/mean residual-sugar-robust-standardized) ;; => 0.3309485731137426

(stats/stddev residual-sugar-robust-standardized) ;; => 1.4089049400041327

(stats/median residual-sugar-robust-standardized) ;; => 0.0

(stats/mad residual-sugar-robust-standardized) ;; => 1.0

(stats/extent residual-sugar-robust-standardized false) ;; => #vec2 [-1.277777777777778, 16.833333333333332]

(stats/mean residual-sugar-rescaled) ;; => 0.0888253813375686

(stats/stddev residual-sugar-rescaled) ;; => 0.07779229730084207

(stats/median residual-sugar-rescaled) ;; => 0.07055214723926381

(stats/mad residual-sugar-rescaled) ;; => 0.055214723926380375

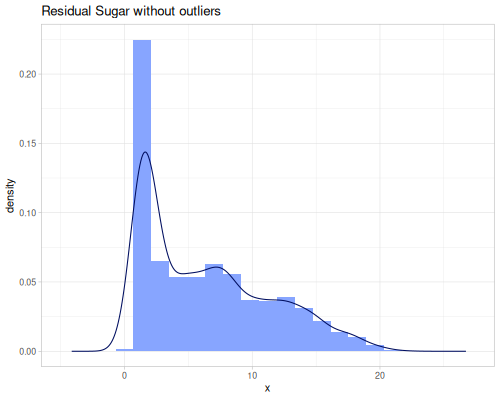

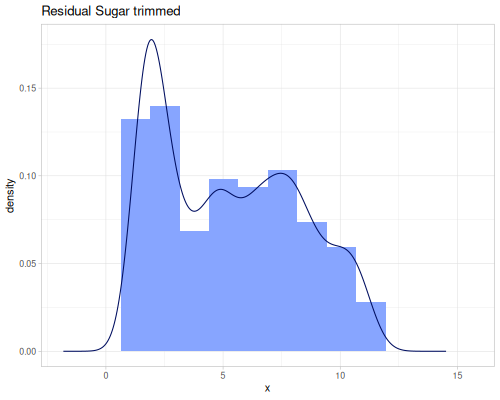

(stats/extent residual-sugar-rescaled false) ;; => #vec2 [0.0, 1.0]Outlier Handling: remove-outliers, trim, trim-lower, trim-upper, and winsor address outliers based on quantile fences.

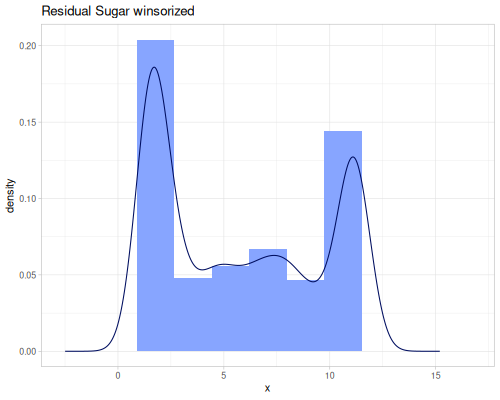

remove-outliersreturns a sequence containing the data points from the original sequence excluding those identified as outliers.trimremoves values outside a specified quantile range (defaulting to 0.2 quantile, removing the bottom and top 20%).trim-lowerandtrim-upperremove only below or above a single quantile.winsorcaps values outside a quantile range to the boundary values instead of removing them. This retains the sample size but reduces the influence of extreme values.

(def residual-sugar-no-outliers (stats/remove-outliers residual-sugar))(def residual-sugar-trimmed (stats/trim residual-sugar))(def residual-sugar-winsorized (stats/winsor residual-sugar))(stats/mean residual-sugar-no-outliers) ;; => 6.354109589041096

(stats/stddev residual-sugar-no-outliers) ;; => 4.950545246813552

(stats/median residual-sugar-no-outliers) ;; => 5.2

(stats/mad residual-sugar-no-outliers) ;; => 3.6

(stats/extent residual-sugar-no-outliers false) ;; => #vec2 [0.6, 22.0]

(count residual-sugar-no-outliers) ;; => 4891

(stats/mean residual-sugar-trimmed) ;; => 5.27940414507772

(stats/stddev residual-sugar-trimmed) ;; => 2.9594599192772546

(stats/median residual-sugar-trimmed) ;; => 5.0

(stats/mad residual-sugar-trimmed) ;; => 2.7

(stats/extent residual-sugar-trimmed false) ;; => #vec2 [1.5, 11.2]

(count residual-sugar-trimmed) ;; => 3088

(stats/mean residual-sugar-winsorized) ;; => 5.789893834218048

(stats/stddev residual-sugar-winsorized) ;; => 3.8241868005472104

(stats/median residual-sugar-winsorized) ;; => 5.2

(stats/mad residual-sugar-winsorized) ;; => 3.6

(stats/quantiles residual-sugar [0.2 0.8]) ;; => [1.5 11.2]

(stats/extent residual-sugar-winsorized false) ;; => #vec2 [1.5, 11.2]

(count residual-sugar-winsorized) ;; => 4898 |

|

|

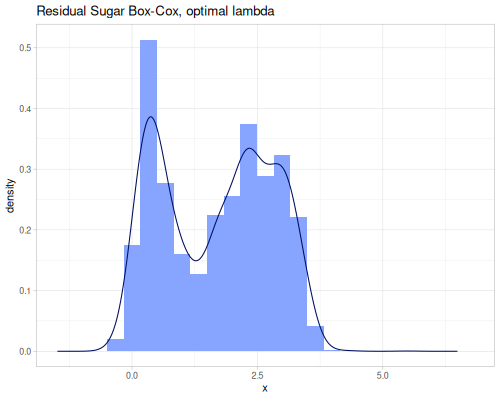

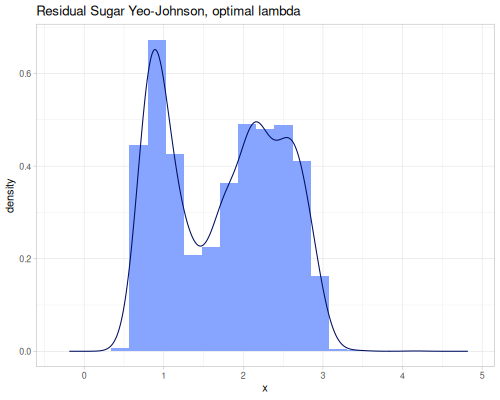

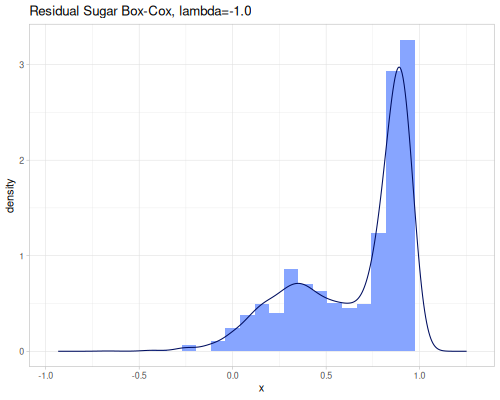

Power Transformations: box-cox-transformation and yeo-johnson-transformation (and their infer-lambda counterparts) are non-linear transformations that can change the shape of the distribution to be more symmetric or normally distributed. They are particularly useful for data that is skewed or violates assumptions of linear models. Both are invertable.

box-cox-transformationworks in general for strictly positive data. It includes the log transformation as a special case (when lambda is \(0.0\)) and generalizes square root, reciprocal, and other power transformations.box-cox-infer-lambdahelps find the optimal lambda parameter. Optional parameters::negative?(default:false), when set totruespecific transformation is performed to keep information about sign.:scaled?(default:false), when set totrue, scale data by geometric mean, when is a number, this number is used as a scale.

\[y_{BC}^{(\lambda)}=\begin{cases} \frac{y^\lambda-1}{\lambda} & \lambda\neq 0 \\ \log(y) & \lambda = 0 \end{cases}\]

Scaled version, with default scale set to geometric mean (GM):

\[y_{BC}^{(\lambda, s)}=\begin{cases} \frac{y^\lambda-1}{\lambda s^{\lambda - 1}} & \lambda\neq 0 \\ s\log(y) & \lambda = 0 \end{cases}\]

When :negative? is set to true, formula takes the following form:

\[y_{BCneg}^{(\lambda)}=\begin{cases} \frac{\operatorname{sgn}(y)|y|^\lambda-1}{\lambda} & \lambda\neq 0 \\ \operatorname{sgn}(y)\log(|y|+1) & \lambda = 0 \end{cases}\]

yeo-johnson-transformationextends Box-Cox to handle zero and negative values.yeo-johnson-infer-lambdafinds the optimal lambda for this transformation.

\[y_{YJ}^{(\lambda)}=\begin{cases} \frac{(y+1)^\lambda - 1}{\lambda} & \lambda \neq 0, y\geq 0 \\ \log(y+1) & \lambda = 0, y\geq 0 \\ \frac{(1-y)^{2-\lambda} - 1}{\lambda - 2} & \lambda \neq 2, y\geq 0 \\ -\log(1-y) & \lambda = 2, y\geq 0 \end{cases}\]

Both fuctions accept additional parameters:

:alpha(dafault:0.0): perform dataset shift by value of the:alphabefore transformation.:inversed?(default:false): perform inverse transformation for givenlambda.

When lambda is set to nil optimal lambda will be calculated (only when :inversed? is false).

(stats/box-cox-transformation [0 1 10] 0.0) ;; => (##-Inf 0.0 2.302585092994046)

(stats/box-cox-transformation [0 1 10] 2.0) ;; => (-0.5 0.0 49.5)

(stats/box-cox-transformation [0 1 10] -2.0 {:alpha 2}) ;; => (0.375 0.4444444444444444 0.4965277777777778)

(stats/box-cox-transformation [0.375 0.444 0.497] -2.0 {:alpha 2, :inverse? true}) ;; => (0.0 0.9880715233359845 10.90994448735805)

(stats/box-cox-transformation [0 1 10] nil {:alpha 1}) ;; => (-0.0 0.5989997131047903 1.493287747539177)

(stats/box-cox-transformation [0 1 10] nil {:scaled? true, :alpha 1}) ;; => (-0.0 2.619403814271841 6.530092646346955)

(stats/box-cox-transformation [0 1 10] nil {:alpha -5, :negative? true}) ;; => (-42.0 -21.666666666666668 41.333333333333336)

(stats/box-cox-transformation [0 1 10] 2.0 {:alpha -5, :negative? true, :scaled? 2}) ;; => (-6.5 -4.25 6.0)

(stats/box-cox-transformation [-6.5 -4.25 6.0] 2.0 {:alpha -5, :negative? true, :scaled? 2, :inverse? true}) ;; => (0.0 1.0 10.0)

(stats/yeo-johnson-transformation [0 1 10]) ;; => (-0.0 0.5989997131047903 1.493287747539177)

(stats/yeo-johnson-transformation [0 1 10] 0.0) ;; => (0.0 0.6931471805599453 2.3978952727983707)

(stats/yeo-johnson-transformation [0 1 10] 2.0 {:alpha -5}) ;; => (-1.791759469228055 -1.6094379124341003 17.5)

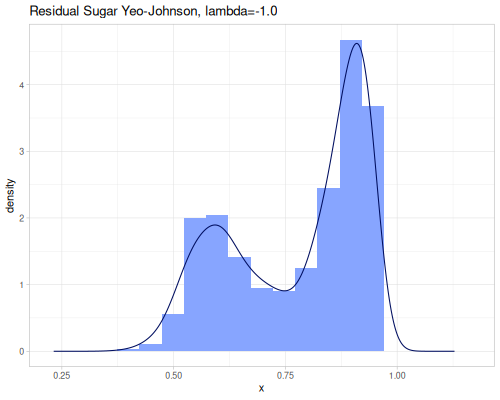

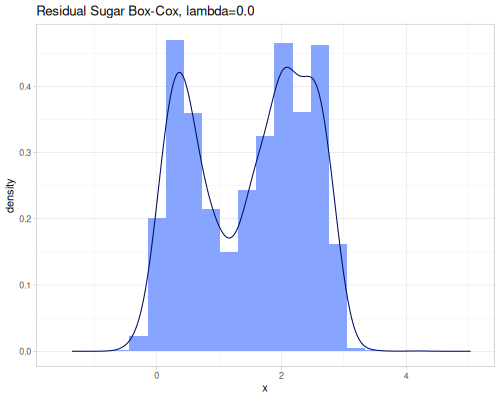

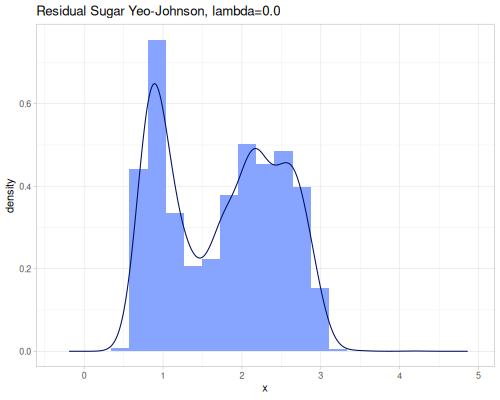

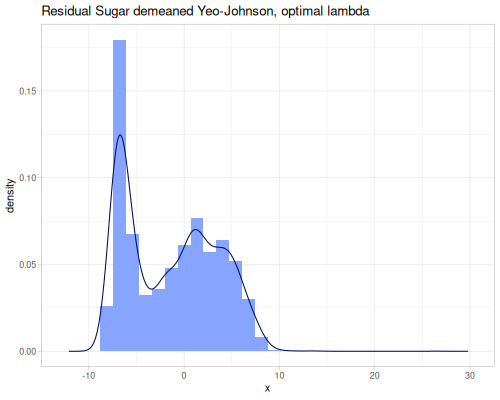

(stats/yeo-johnson-transformation [-1.79 -1.61 17.5] 2.0 {:alpha -5, :inverse? true}) ;; => (0.010547533616885651 0.9971887721664103 10.0)Let’s illustrate how real data look after transformation. We’ll start with finding an optimal lambda parameter for both transformations.

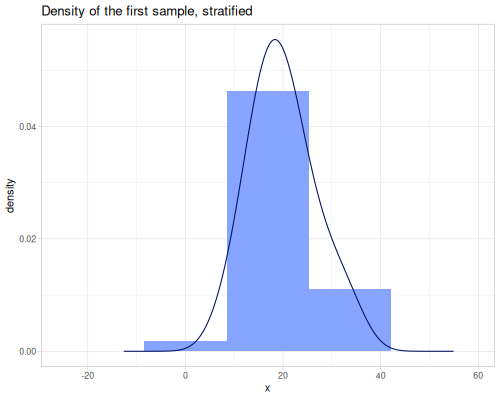

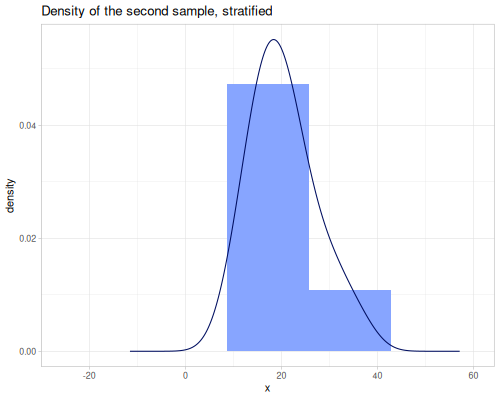

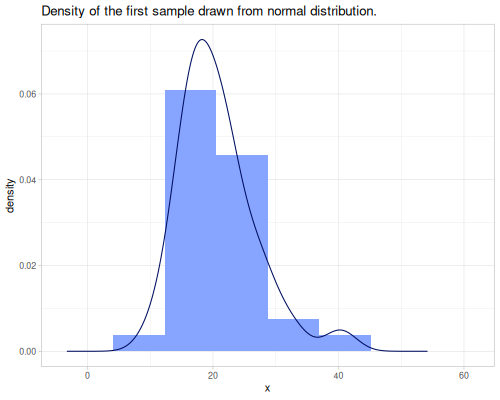

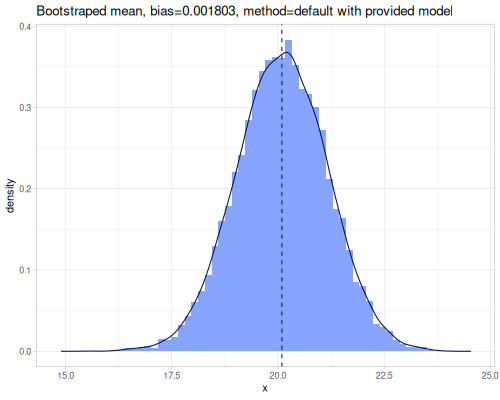

(stats/box-cox-infer-lambda residual-sugar)0.12450565747077313(stats/yeo-johnson-infer-lambda residual-sugar)-0.004232775028107413(def residual-sugar-box-cox (stats/box-cox-transformation residual-sugar nil))(def residual-sugar-yeo-johnson (stats/yeo-johnson-transformation residual-sugar nil))(stats/mean residual-sugar-box-cox) ;; => 1.6895596029306466

(stats/stddev residual-sugar-box-cox) ;; => 1.1032589652895741

(stats/median residual-sugar-box-cox) ;; => 1.83006349249072

(stats/mad residual-sugar-box-cox) ;; => 1.0527777588806666

(stats/extent residual-sugar-box-cox false) ;; => #vec2 [-0.4949201739139414, 5.494888687185149]

(stats/mean residual-sugar-yeo-johnson) ;; => 1.7445922710465025

(stats/stddev residual-sugar-yeo-johnson) ;; => 0.7180340465417893

(stats/median residual-sugar-yeo-johnson) ;; => 1.8175219821484885

(stats/mad residual-sugar-yeo-johnson) ;; => 0.6888246769156978

(stats/extent residual-sugar-yeo-johnson false) ;; => #vec2 [0.46953642189900135, 4.164560241279393] |

|

|

|

|

|

As you can see, the Yeo-Johnson transformation with the inferred lambda has made the residual-sugar distribution appear more symmetric and perhaps closer to a normal distribution shape.

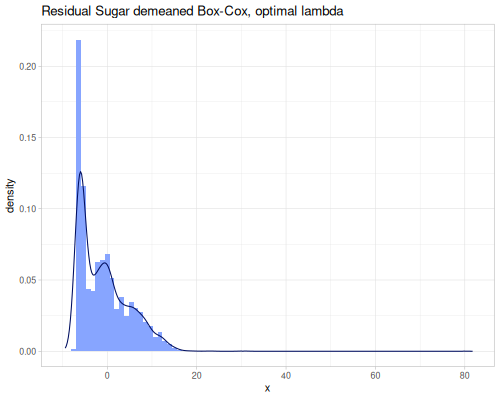

Both power transformation can work on negative data as well. When Box-Cox is used, :negative? option should be set to true.

(stats/box-cox-infer-lambda residual-sugar

nil {:alpha (- (stats/mean residual-sugar)) :negative? true})1.0967709597378346(stats/yeo-johnson-infer-lambda residual-sugar nil {:alpha (- (stats/mean residual-sugar))})0.7290829398083033(def residual-sugar-box-cox-demeaned

(stats/box-cox-transformation

residual-sugar nil {:alpha (- (stats/mean residual-sugar)) :negative? true}))(def residual-sugar-yeo-johnson-demeaned (stats/yeo-johnson-transformation

residual-sugar nil

{:alpha (- (stats/mean residual-sugar))}))(stats/mean residual-sugar-box-cox-demeaned) ;; => -0.817408751945805

(stats/stddev residual-sugar-box-cox-demeaned) ;; => 5.608979218510315

(stats/median residual-sugar-box-cox-demeaned) ;; => -2.0166286943919243

(stats/mad residual-sugar-box-cox-demeaned) ;; => 3.9790383049427582

(stats/extent residual-sugar-box-cox-demeaned false) ;; => #vec2 [-7.1704674516316125, 79.51310067615422]

(stats/mean residual-sugar-yeo-johnson-demeaned) ;; => -1.3556628530573829

(stats/stddev residual-sugar-yeo-johnson-demeaned) ;; => 4.783153826599674

(stats/median residual-sugar-yeo-johnson-demeaned) ;; => -1.3457965294079925

(stats/mad residual-sugar-yeo-johnson-demeaned) ;; => 4.669926884717297

(stats/extent residual-sugar-yeo-johnson-demeaned false) ;; => #vec2 [-8.192317681811963, 25.905106824581896] |

|

Correlation and Covariance

Measures of the relationship between two or more variables.

covariance,correlationpearson-correlation,spearman-correlation,kendall-correlationcoefficient-matrix,correlation-matrix,covariance-matrix

Covariance vs. Correlation:

covariancemeasures the extent to which two variables change together. A positive covariance means they tend to increase or decrease simultaneously. A negative covariance means one tends to increase when the other decreases. A covariance near zero suggests no linear relationship. The magnitude of covariance depends on the scales of the variables, making it difficult to compare covariances between different pairs of variables. The sample covariance between two sequences \(X = \{x_1, \dots, x_n\}\) and \(Y = \{y_1, \dots, y_n\}\) is calculated as:

\[ \text{Cov}(X, Y) = \frac{1}{n-1} \sum_{i=1}^n (x_i - \bar{x})(y_i - \bar{y}) \]

where \(\bar{x}\) and \(\bar{y}\) are the sample means.

correlationstandardizes the covariance, resulting in a unitless measure that ranges from -1 to +1. It indicates both the direction and strength of a relationship. A correlation of +1 indicates a perfect positive relationship, -1 a perfect negative relationship, and 0 no linear relationship. Thecorrelationfunction infastmath.statsdefaults to computing the Pearson correlation coefficient.

Types of Correlation:

pearson-correlation: The most common correlation coefficient, also known as the Pearson product-moment correlation coefficient (\(r\)). It measures the strength and direction of a linear relationship between two continuous variables. It assumes the variables are approximately normally distributed and that the relationship is linear. It is sensitive to outliers. The formula for the sample Pearson correlation coefficient is:

\[r = \frac{\text{Cov}(X, Y)}{s_x s_y} = \frac{\sum_{i=1}^n (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^n (x_i - \bar{x})^2 \sum_{i=1}^n (y_i - \bar{y})^2}}\]

where \(s_x\) and \(s_y\) are the sample standard deviations.

spearman-correlation: Spearman’s rank correlation coefficient (\(\rho\)) is a non-parametric measure of the strength and direction of a monotonic relationship between two variables. A monotonic relationship is one that is either consistently increasing or consistently decreasing, but not necessarily linear. Spearman’s correlation is calculated by applying the Pearson formula to the ranks of the data values rather than the values themselves. This makes it less sensitive to outliers than Pearson correlation and suitable for ordinal data or when the relationship is monotonic but non-linear.kendall-correlation: Kendall’s Tau rank correlation coefficient (\(\tau\)) is another non-parametric measure of the strength and direction of a monotonic relationship. It is based on the number of concordant and discordant pairs of observations. A pair of data points is concordant if their values move in the same direction (both increase or both decrease) relative to each other, and discordant if they move in opposite directions. Kendall’s Tau is generally preferred over Spearman’s Rho for smaller sample sizes or when there are many tied ranks. One common formulation, Kendall’s Tau-a, is:

\[\tau_A = \frac{N_c - N_d}{n(n-1)/2}\]

where \(N_c\) is the number of concordant pairs and \(N_d\) is the number of discordant pairs.

Comparison of Correlation Methods:

- Use Pearson for measuring linear relationships between continuous, normally distributed variables.

- Use Spearman or Kendall for measuring monotonic relationships (linear or non-linear) between variables, especially when data is not normally distributed, contains outliers, or is ordinal. Kendall is often more robust with ties and smaller samples.

Matrix Functions for Multiple Variables:

coefficient-matrix: A generic function that computes a specified pairwise measure (defined by a function passed as an argument) between all pairs of sequences in a collection. Useful for generating matrices of custom similarity, distance, or correlation measures.covariance-matrix: A specialization that computes the pairwisecovariancefor all sequences in a collection. The output is a symmetric matrix where the element at rowi, columnjis the covariance between sequenceiand sequencej. The diagonal elements are the variances of the individual sequences.correlation-matrix: A specialization that computes the pairwisecorrelation(Pearson by default, or specified via keyword like:spearmanor:kendall) for all sequences in a collection. The output is a symmetric matrix where the element at rowi, columnjis the correlation between sequenceiand sequencej. The diagonal elements are always 1.0 (a variable is perfectly correlated with itself).

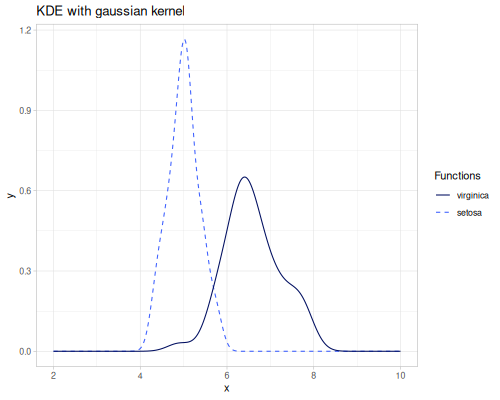

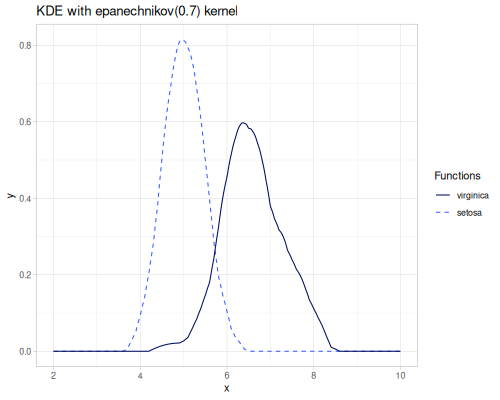

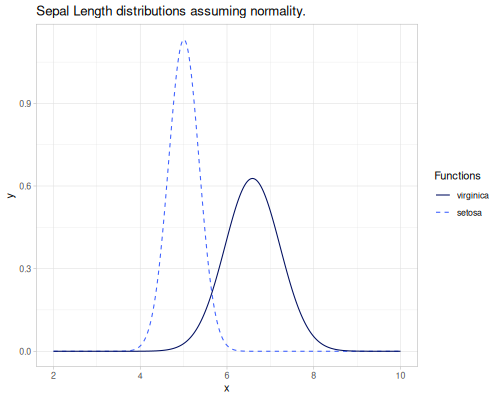

Let’s examine the correlations between the numerical features in the iris dataset.

(stats/covariance virginica-sepal-length setosa-sepal-length) ;; => 0.03007346938775512

(stats/correlation virginica-sepal-length setosa-sepal-length) ;; => 0.13417210385493564

(stats/pearson-correlation virginica-sepal-length setosa-sepal-length) ;; => 0.1341721038549354

(stats/spearman-correlation virginica-sepal-length setosa-sepal-length) ;; => 0.038837958926489176

(stats/kendall-correlation virginica-sepal-length setosa-sepal-length) ;; => 0.030531668042830747To generate matrices we’ll use three sepal lengths samples. The last two examples use custom measure function: Euclidean distance between samples and Glass’ delta.

(stats/covariance-matrix (vals sepal-lengths)) ;; => ([0.12424897959183674 -0.014710204081632658 0.03007346938775512] [-0.014710204081632658 0.2664326530612246 -0.04649795918367346] [0.03007346938775512 -0.04649795918367346 0.4043428571428573])

(stats/correlation-matrix (vals sepal-lengths)) ;; => ([1.0 -0.08084972701756978 0.13417210385493492] [-0.08084972701756978 0.9999999999999999 -0.14166588513698952] [0.13417210385493492 -0.14166588513698952 1.0])

(stats/correlation-matrix (vals sepal-lengths) :kendall) ;; => ([1.0 -0.06357129445203882 0.030531668042830747] [-0.06357129445203882 1.0 -0.10454171307909799] [0.030531668042830747 -0.10454171307909799 1.0])

(stats/correlation-matrix (vals sepal-lengths) :spearman) ;; => ([1.0 -0.10163684956357029 0.03883795892648921] [-0.10163684956357029 1.0 -0.14067854670792204] [0.03883795892648921 -0.14067854670792204 1.0])

(stats/coefficient-matrix (vals sepal-lengths) stats/L2 true) ;; => ([0.0 7.989367934949548 12.16922347563722] [7.989367934949548 0.0 7.660287200882224] [12.16922347563722 7.660287200882224 0.0])

(stats/coefficient-matrix (vals sepal-lengths) stats/glass-delta) ;; => ([0.0 -1.8017279836157427 -2.4878923883271122] [2.6383750609896146 0.0 -1.0253513509413892] [4.488074566113517 1.263146930448887 0.0])Distance and Similarity Metrics

Measures of distance, error, or similarity between sequences or distributions.

me,mae,maperss,mse,rmser2count=,L0,L1,L2sq,L2,LInfpsnrdissimilarity,similarity

Distance metrics quantify how far apart or different two data sequences or probability distributions are. Similarity metrics, conversely, measure how close or alike they are, often being the inverse or a transformation of a distance. fastmath.stats provides a range of these measures suitable for comparing numerical sequences, observed counts (histograms), or theoretical probability distributions.

Error Metrics

These functions typically quantify the difference between an observed sequence and a predicted or reference sequence, focusing on the magnitude of errors. All can accept a constant as a second argument.

me(Mean Error): The average of the differences between corresponding elements. \[ ME = \frac{1}{n} \sum_{i=1}^n (x_i - y_i) \]mae(Mean Absolute Error): The average of the absolute differences. More robust to outliers than squared error. \[ MAE = \frac{1}{n} \sum_{i=1}^n |x_i - y_i| \]mape(Mean Absolute Percentage Error): The average of the absolute percentage errors. Useful for relative error assessment, but undefined if the reference value \(x_i\) is zero. \[ MAPE = \frac{1}{n} \sum_{i=1}^n \left| \frac{x_i - y_i}{x_i} \right| \times 100\% \]rss(Residual Sum of Squares): The sum of the squared differences. Used in least squares regression. \[ RSS = \sum_{i=1}^n (x_i - y_i)^2 \]mse(Mean Squared Error): The average of the squared differences. Penalizes larger errors more heavily. \[ MSE = \frac{1}{n} \sum_{i=1}^n (x_i - y_i)^2 \]rmse(Root Mean Squared Error): The square root of the MSE. Has the same units as the original data. \[ RMSE = \sqrt{\frac{1}{n} \sum_{i=1}^n (x_i - y_i)^2} \]r2(Coefficient of Determination): Measures the proportion of the variance in the dependent variable that is predictable from the independent variable(s). Calculated as \(1 - (RSS / TSS)\), where TSS is the Total Sum of Squares. It ranges from 0 to 1 for linear regression. \[ R^2 = 1 - \frac{\sum (x_i - y_i)^2}{\sum (x_i - \bar{x})^2} \]- adjusted

r2: A modified version of \(R^2\) that has been adjusted for the number of predictors in the model. It increases only if the new term improves the model more than would be expected by chance. \[ R^2_{adj} = 1 - (1 - R^2) \frac{n-1}{n-p-1} \]

Let’s use setosa-sepal-length as observed and virginica-sepal-length as predicted (though they are independent samples, not predictions) to illustrate error measures.

(stats/me setosa-sepal-length virginica-sepal-length) ;; => -1.582

(stats/mae setosa-sepal-length virginica-sepal-length) ;; => 1.582

(stats/mape setosa-sepal-length virginica-sepal-length) ;; => 0.3211321155719454

(stats/rss setosa-sepal-length virginica-sepal-length) ;; => 148.09000000000006

(stats/mse setosa-sepal-length virginica-sepal-length) ;; => 2.9618

(stats/rmse setosa-sepal-length virginica-sepal-length) ;; => 1.720988088279521

(stats/r2 setosa-sepal-length virginica-sepal-length) ;; => -23.324102361946068

(stats/r2 setosa-sepal-length virginica-sepal-length 2) ;; => -24.359170547560794

(stats/r2 setosa-sepal-length virginica-sepal-length 5) ;; => -26.0882049030763Also we can compare an observed sequence to a constant value. For example to a mean of the virginica sepal length.

(def vsl-mean (stats/mean virginica-sepal-length))(stats/me setosa-sepal-length vsl-mean) ;; => -1.582

(stats/mae setosa-sepal-length vsl-mean) ;; => 1.582

(stats/mape setosa-sepal-length vsl-mean) ;; => 0.32244506843926823

(stats/rss setosa-sepal-length vsl-mean) ;; => 131.2244000000001

(stats/mse setosa-sepal-length vsl-mean) ;; => 2.6244880000000004

(stats/rmse setosa-sepal-length vsl-mean) ;; => 1.6200271602661482

(stats/r2 setosa-sepal-length vsl-mean) ;; => -20.55389113366842

(stats/r2 setosa-sepal-length vsl-mean 2) ;; => -21.471077990420266

(stats/r2 setosa-sepal-length vsl-mean 5) ;; => -23.003196944312556Distance Metrics (L-p Norms and others)

These functions represent common distance measures, often related to L-p norms between vectors (sequences).

count=,L0: Counts the number of elements that are equal in both sequences. While related to the L0 “norm” (which counts non-zero elements), this implementation counts equal elements after subtraction. \[ Count= = \sum_{i=1}^n \mathbb{I}(x_i = y_i) \]L1(Manhattan/City Block Distance): The sum of the absolute differences. \[ L_1 = \sum_{i=1}^n |x_i - y_i| \]L2sq(Squared Euclidean Distance): The sum of the squared differences. Equivalent torss. \[ L_2^2 = \sum_{i=1}^n (x_i - y_i)^2 \]L2(Euclidean Distance): The square root of the sum of the squared differences. The most common distance metric. \[ L_2 = \sqrt{\sum_{i=1}^n (x_i - y_i)^2} \]LInf(Chebyshev Distance): The maximum absolute difference between corresponding elements. \[ L_\infty = \max_{i} |x_i - y_i| \]psnr(Peak Signal-to-Noise Ratio): A measure of signal quality often used in image processing, derived from the MSE. Higher PSNR indicates better quality (less distortion). Calculated based on the maximum possible value of the data and the MSE. \[ PSNR = 10 \cdot \log_{10} \left( \frac{MAX^2}{MSE} \right) \]

Using the sepal length samples again:

(stats/count= setosa-sepal-length virginica-sepal-length) ;; => 1

(stats/L0 setosa-sepal-length virginica-sepal-length) ;; => 1

(stats/L1 setosa-sepal-length virginica-sepal-length) ;; => 79.10000000000005

(stats/L2sq setosa-sepal-length virginica-sepal-length) ;; => 148.09000000000006

(stats/L2 setosa-sepal-length virginica-sepal-length) ;; => 12.16922347563722

(stats/LInf setosa-sepal-length virginica-sepal-length) ;; => 3.1000000000000005

(stats/psnr setosa-sepal-length virginica-sepal-length) ;; => 13.236984537937193Dissimilarity and Similarity

dissimilarity and similarity functions provide measures for comparing probability distributions or frequency counts (like histograms). They quantify how ‘far apart’ or ‘alike’ two data sequences, interpreted as distributions, are. They take a method keyword specifying the desired measure. Many methods exist, each with different properties and interpretations. They can accept raw data sequences, automatically creating histograms for comparison (controlled by :bins), or they can take pre-calculated frequency sequences or a data sequence and a fastmath.random distribution object.

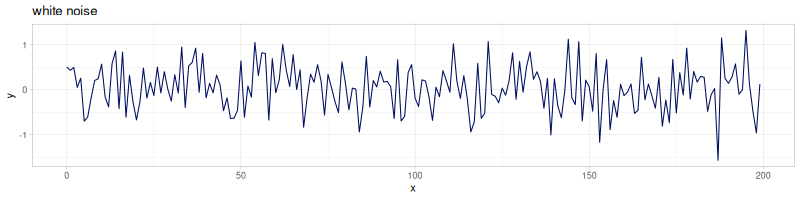

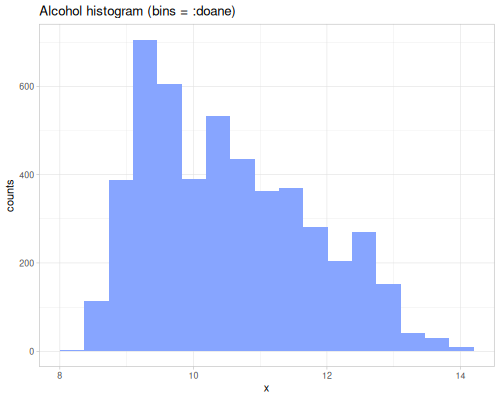

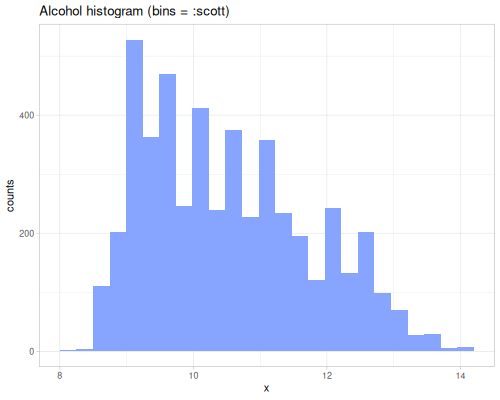

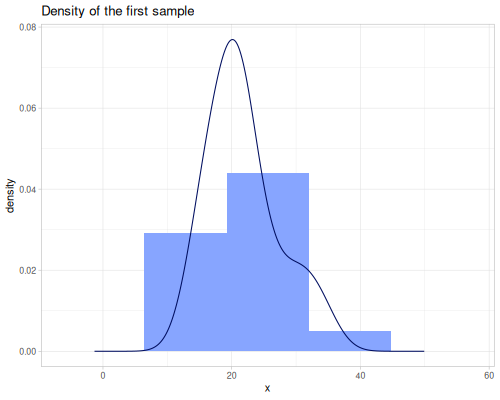

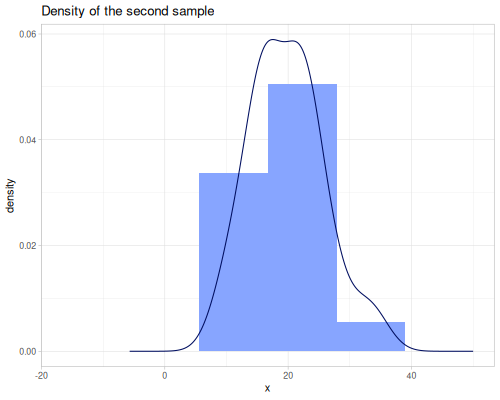

Parameters: