fastmath.optimization

Optimization.

Namespace provides various optimization methods.

- Brent (1d functions)

- Bobyqa (2d+ functions)

- Powell

- Nelder-Mead

- Multidirectional simplex

- CMAES

- Gradient

- L-BFGS-B

- Bayesian Optimization (see below)

- Linear optimization

All optimizers require bounds.

Optimizers

To optimize functions call one of the following functions:

- minimize or maximize - to perform actual optimization

- scan-and-minimize or scan-and-maximize - functions find initial point using brute force and then perform optimization paralelly for best initialization points. Brute force scan is done using jitter low discrepancy sequence generator.

You can also create optimizer (function which performs optimization) by calling minimizer or maximizer. Optimizer accepts initial point.

All above accept:

- one of the optimization method, ie:

:brent,:bobyqa,:nelder-mead,:multidirectional-simplex,:cmaes,:gradient,:bfgsand:lbfgsb - function to optimize

- parameters as a map

For parameters meaning refer Optim package

Common parameters

:bounds(obligatory) - search ranges for each dimensions as a seqence of low high pairs:initial- initial point other then mid of the bounds as vector:max-evals- maximum number of function evaluations:max-iters- maximum number of algorithm interations:bounded?- should optimizer force to keep search within bounds (some algorithms go outside desired ranges):stats?- return number of iterations and evaluations along with result:reland:abs- relative and absolute accepted errors

For scan-and-... functions additionally you can provide:

:N- number of brute force iterations:n- fraction of N which are used as initial points to parallel optimization:jitter- jitter factor for sequence generator (for scanning domain)

Specific parameters

- BOBYQA -

:number-of-points,:initial-radius,:stopping-radius - Nelder-Mead -

:rho,:khi,:gamma,:sigma,:side-length - Multidirectional simples -

:khi,:gamma,:side-length - CMAES -

:check-feasable-count,:diagonal-only,:stop-fitness,:active-cma?,:population-size - Gradient -

:bracketing-range,:formula(:polak-ribiereor:fletcher-reeves),:gradient-h(finite differentiation step, default:0.01)

Bayesian Optimization

Bayesian optimizer can be used for optimizing expensive to evaluate black box functions. Refer this article or this article

Linear optimization

Categories

Other vars: bayesian-optimization linear-optimization maximize maximizer minimize minimizer scan-and-maximize scan-and-minimize

bayesian-optimization

(bayesian-optimization f {:keys [warm-up init-points bounds utility-function-type utility-param kernel kscale jitter noise optimizer optimizer-params normalize?], :or {utility-function-type :ucb, init-points 3, jitter 0.25, noise 1.0E-8, utility-param (if (#{:ei :poi} utility-function-type) 0.001 2.576), warm-up (* (count bounds) 1000), normalize? true, kernel (k/kernel :mattern-52), kscale 1.0}})Bayesian optimizer

Parameters are:

:warm-up- number of brute force iterations to find maximum of utility function:init-points- number of initial evaluation before bayesian optimization starts. Points are selected using jittered low discrepancy sequence generator (see: jittered-sequence-generator:bounds- bounds for each dimension:utility-function-type- one of:ei,:poior:ucb:utility-param- parameter for utility function (kappa forucband xi foreiandpoi):kernel- kernel, default:mattern-52, see fastmath.kernel:kscale- scaling factor for kernel:jitter- jitter factor for sequence generator (used to find initial points):noise- noise (lambda) factor for gaussian process:optimizer- name of optimizer (used to optimized utility function):optimizer-params- optional parameters for optimizer:normalize?- normalize data in gaussian process?

Returns lazy sequence with consecutive executions. Each step consist:

:x- maximumx:y- value:xs- list of all visited x’s:ys- list of values for every visited x:gp- current gaussian process regression instance:util-fn- current utility function:util-best- best x in utility function

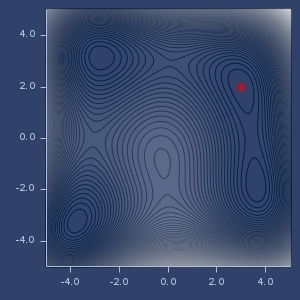

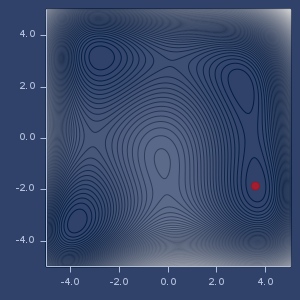

Examples

Usage

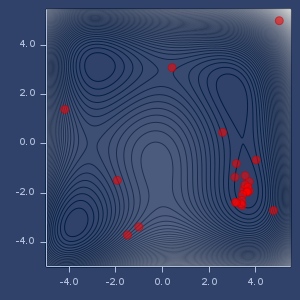

(let [bounds [[-5.0 5.0] [-5.0 5.0]]

f (fn [x y]

(+ (m/sq (+ (* x x) y -11.0)) (m/sq (+ x (* y y) -7.0))))]

(nth (bayesian-optimization

(fn [x y] (- (f x y)))

{:bounds bounds, :init-points 5, :utility-function-type :poi})

10))

;;=> {:gp

;;=> #object[fastmath.gp.GaussianProcess 0x75d60e4e "fastmath.gp.GaussianProcess@75d60e4e"],

;;=> :util-best (3.090683441434399 -2.5753581852302574),

;;=> :util-fn #,

;;=> :x (3.090683441434399 -2.5753581852302574),

;;=> :xs ((3.090683441434399 -2.5753581852302574)

;;=> (3.08137815793059 -2.5719514043209877)

;;=> (3.0722602683396554 -2.5678904559788704)

;;=> (3.044235559957177 -2.5577231457679956)

;;=> (3.0384751890334387 -2.5560644481228976)

;;=> (3.02528241113434 -2.5535878275661266)

;;=> (2.9981027723322393 -2.552134606201862)

;;=> (2.9807634731596298 -2.5532894285227052)

;;=> (2.946869247203799 -2.5608911643317596)

;;=> (2.924251050069772 -2.568771747913321)

;;=> (2.900173107918855 -2.5801060157145246)

;;=> [-1.6739008422610908 -4.089742177140744]

;;=> [-4.660923443946751 1.514333805921578]

;;=> [2.8970984517345197 -2.5816881258154507]

;;=> [0.4914033663171917 2.934117849525041]

;;=> [-2.20060990754021 -1.1375263955112973]),

;;=> :y -23.600366447617947,

;;=> :ys (-23.600366447617947

;;=> -23.892518063061576

;;=> -24.158798297850893

;;=> -25.0954726798367

;;=> -25.309545545667767

;;=> -25.853628789472012

;;=> -27.133264640601112

;;=> -28.043636673700355

;;=> -30.0588826168771

;;=> -31.540301005916447

;;=> -33.258478584934814

;;=> -215.82614043566036

;;=> -237.5360033352615

;;=> -33.48555288303808

;;=> -65.6332058306506

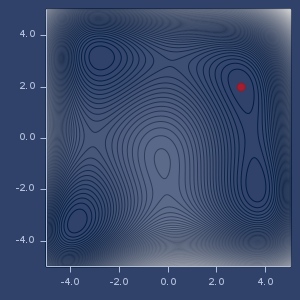

;;=> -115.72973921258156)} Bayesian optimization points

linear-optimization

(linear-optimization target constraints)(linear-optimization target constraints {:keys [goal epsilon max-ulps cut-off rule non-negative? max-iter stats?], :or {goal :minimize, epsilon 1.0E-6, max-ulps 10, cut-off 1.0E-10, rule :dantzig, non-negative? false, max-iter Integer/MAX_VALUE}})Solves a linear problem.

Target is defined as a vector of coefficients and constant as the last value: [a1 a2 a3 ... c] means f(x1,x2,x3) = a1*x1 + a2*x2 + a3*x3 + ... + c

Constraints are defined as a sequence of one of the following triplets:

[a1 a2 a3 ...] R n- which meansa1*x1+a2*x2+a3*x3+... R n[a1 a2 a3 ... ca] R [b1 b2 b3 ... cb]- which meansa1*x1+a2*x2+a3*x3+...+ca R b1*x1+b2*x2+b3*x3+...+cb

R is a relationship and can be one of <=, >= or = as symbol or keyword. Also :leq, :geq and :eq are valid.

Function returns pair of optimal point and function value. If stat? option is set to true, returns also information about number of iterations.

Possible options:

:goal-:minimize(default) or:maximize:rule- pivot selection rule,:dantzig(default) or:bland:max-iter- maximum number of iterations, maximum integer by default:non-negative?- allow non-negative variables only, default:false:epsilon- convergence value, default:1.0e-6::max-ulps- floating point comparisons, default:10ulp:cut-off- pivot elements smaller than cut-off are treated as zero, default:1.0e-10

(linear-optimization [-1 4 0] [[-3 1] :<= 6

[-1 -2] :>= -4

[0 1] :>= -3])

;; => [(9.999999999999995 -3.0) -21.999999999999993]

maximize

(maximize method f config)Maximize given function.

Parameters: optimization method, function and configuration.

Examples

Usage

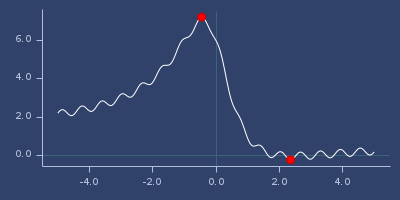

(let [bounds [[-5.0 5.0]]

f (fn [x]

(+ (* 0.2 (m/sin (* 10.0 x)))

(/ (+ 6.0 (- (* x x) (* 5.0 x))) (inc (* x x)))))]

{:powell (maximize :powell f {:bounds bounds}),

:brent (maximize :brent f {:bounds bounds}),

:bfgs (maximize :bfgs f {:bounds bounds})})

;;=> {:bfgs [(-0.452310691333493) 7.224689671203529],

;;=> :brent [(-0.4523106823170646) 7.224689671203529],

;;=> :powell [(-0.4522927913307559) 7.224689666542709]}maximizer

(maximizer method f config)Create optimizer which maximizes function.

Returns function which performs optimization for optionally given initial point.

Examples

Usage

(let [bounds [[-5.0 5.0]]

f (fn [x]

(+ (* 0.2 (m/sin (* 10.0 x)))

(/ (+ 6.0 (- (* x x) (* 5.0 x))) (inc (* x x)))))

optimizer (maximizer :cmaes f {:bounds bounds})]

{:optimizer optimizer,

:run-1 (optimizer),

:run-2 (optimizer [4.5]),

:run-3 (optimizer [-4.5])})

;;=> {:optimizer #,

;;=> :run-1 [(0.0) 6.0],

;;=> :run-2 [(-2.1369139581266254) 3.701187915447829],

;;=> :run-3 [(-0.6782829357849605) 6.651455012187882]} minimize

(minimize method f config)Minimize given function.

Parameters: optimization method, function and configuration.

Examples

1d function

(let [bounds [[-5.0 5.0]]

f (fn [x]

(+ (* 0.2 (m/sin (* 10.0 x)))

(/ (+ 6.0 (- (* x x) (* 5.0 x))) (inc (* x x)))))]

{:powell (minimize :powell f {:bounds bounds}),

:brent (minimize :brent f {:bounds bounds}),

:brent-with-initial-point

(minimize :brent f {:bounds bounds, :initial [2.0]})})

;;=> {:brent [(-3.947569586073323) 2.2959519482739297],

;;=> :brent-with-initial-point [(2.979593427579756) -0.20178173314322778],

;;=> :powell [(2.3572022329682807) -0.23501046849989368]}2d function

(let [bounds [[-5.0 5.0] [-5.0 5.0]]

f (fn [x y]

(+ (m/sq (+ (* x x) y -11.0)) (m/sq (+ x (* y y) -7.0))))]

{:bobyqa (minimize :bobyqa f {:bounds bounds}),

:gradient (minimize :gradient f {:bounds bounds}),

:bfgs (minimize :bfgs f {:bounds bounds})})

;;=> {:bfgs [(2.9999999998534097 1.99999999883261) 2.7385230842283264E-17],

;;=> :bobyqa [(3.5844283403693833 -1.848126526921083)

;;=> 1.1846390625694734E-19],

;;=> :gradient [(2.999999970870807 2.00000006031274) 5.809729042963462E-14]}With stats

(minimize :gradient

(fn* [p1__35743#] (m/sin p1__35743#))

{:bounds [[-5 5]], :stats? true})

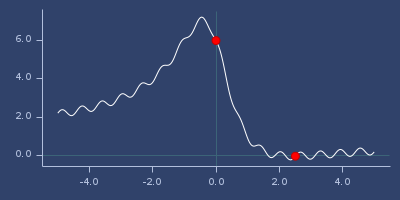

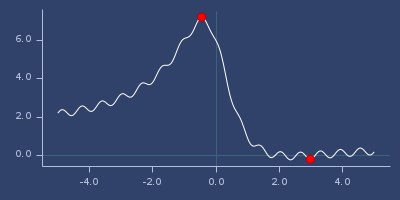

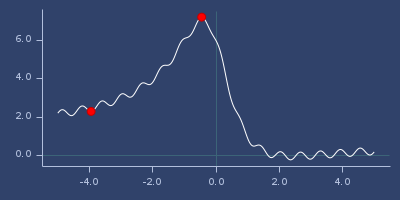

;;=> {:evaluations 22, :iterations 3, :result [(-1.5707963273466874) -1.0]}min/max of f using

:powelloptimizer

min/max of f using

:nelder-meadoptimizer

min/max of f using

:multidirectional-simplexoptimizer

min/max of f using

:cmaesoptimizer

min/max of f using

:gradientoptimizer

min/max of f using

:brentoptimizer

min/max of f using

:powelloptimizer

min/max of f using

:nelder-meadoptimizer

min/max of f using

:multidirectional-simplexoptimizer

min/max of f using

:cmaesoptimizer

min/max of f using

:gradientoptimizer

min/max of f using

:bobyqaoptimizer

minimizer

(minimizer method f config)Create optimizer which minimizes function.

Returns function which performs optimization for optionally given initial point.

Examples

Usage

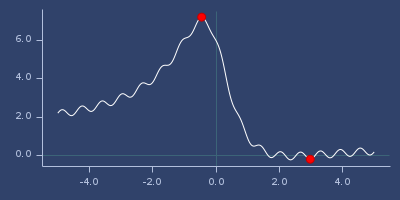

(let [bounds [[-5.0 5.0]]

f (fn [x]

(+ (* 0.2 (m/sin (* 10.0 x)))

(/ (+ 6.0 (- (* x x) (* 5.0 x))) (inc (* x x)))))

optimizer (minimizer :brent f {:bounds bounds})]

{:optimizer optimizer,

:run-1 (optimizer),

:run-2 (optimizer [4.5]),

:run-3 (optimizer [-4.5])})

;;=> {:optimizer #,

;;=> :run-1 [(-3.947569586073323) 2.2959519482739297],

;;=> :run-2 [(2.357114991599655) -0.2350104692683484],

;;=> :run-3 [(-4.570514545168775) 2.074718566761628]} scan-and-maximize

Examples

Usage

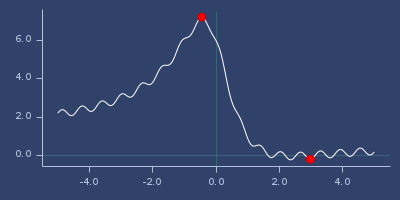

(let [bounds [[-5.0 5.0]]

f (fn [x]

(+ (* 0.2 (m/sin (* 10.0 x)))

(/ (+ 6.0 (- (* x x) (* 5.0 x))) (inc (* x x)))))]

{:powell (scan-and-maximize :powell f {:bounds bounds}),

:brent (scan-and-maximize :brent f {:bounds bounds}),

:bfgs (scan-and-maximize :bfgs f {:bounds bounds})})

;;=> {:bfgs [(-0.4523106925133572) 7.224689671203531],

;;=> :brent [(-0.4523106953149838) 7.224689671203531],

;;=> :powell [(-0.45227510869667575) 7.2246896527880455]}scan-and-minimize

Examples

1d function

(let [bounds [[-5.0 5.0]]

f (fn [x]

(+ (* 0.2 (m/sin (* 10.0 x)))

(/ (+ 6.0 (- (* x x) (* 5.0 x))) (inc (* x x)))))]

{:powell (scan-and-minimize :powell f {:bounds bounds}),

:brent (scan-and-minimize :brent f {:bounds bounds}),

:bfgs (scan-and-minimize :bfgs f {:bounds bounds})})

;;=> {:bfgs [(2.357114904769161) -0.2350104692684256],

;;=> :brent [(2.357114904820884) -0.23501046926842548],

;;=> :powell [(2.3571147985827405) -0.23501046926831126]}2d function

(let [bounds [[-5.0 5.0] [-5.0 5.0]]

f (fn [x y]

(+ (m/sq (+ (* x x) y -11.0)) (m/sq (+ x (* y y) -7.0))))]

{:bobyqa (scan-and-minimize :bobyqa f {:bounds bounds}),

:gradient (scan-and-minimize :gradient f {:bounds bounds}),

:bfgs (scan-and-minimize :bfgs f {:bounds bounds})})

;;=> {:bfgs [(-2.8051180926944563 3.13131252564036) 3.2116393279741417E-15],

;;=> :bobyqa [(-2.805118086981481 3.131312518269862)

;;=> 4.1058320675767663E-20],

;;=> :gradient [(3.5844283403327744 -1.8481265270314733)

;;=> 6.516574632562574E-20]}